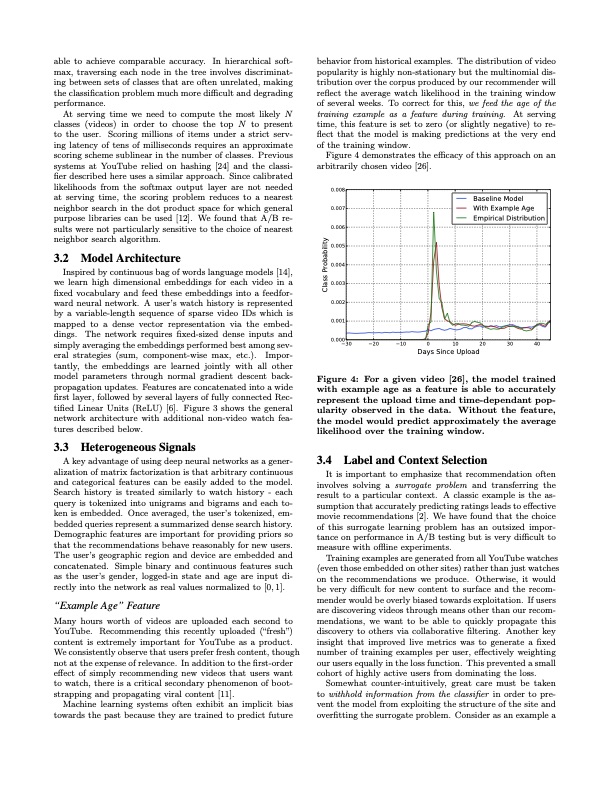

able to achieve comparable accuracy. In hierarchical softmax, traversing each node in the tree involves discriminating between sets of classes that are often unrelated, making the classification problem much more difficult and degrading performance.

At serving time we need to compute the most likely N classes (videos) in order to choose the top N to present to the user. Scoring millions of items under a strict serving latency of tens of milliseconds requires an approximate scoring scheme sublinear in the number of classes. Previous systems at YouTube relied on hashing [24] and the classifier described here uses a similar approach. Since calibrated likelihoods from the softmax output layer are not needed at serving time, the scoring problem reduces to a nearest neighbor search in the dot product space for which general purpose libraries can be used [12]. We found that A/B results were not particularly sensitive to the choice of nearest neighbor search algorithm.

3.2 Model Architecture

Inspired by continuous bag of words language models [14], we learn high-dimensional embeddings for each video in a fixed vocabulary and feed these embeddings into a feedforward neural network. A user's watch history is represented by a variable-length sequence of sparse video IDs which is mapped to a dense vector representation via the embeddings. The network requires fixed-sized dense inputs, and simply averaging the embeddings performed best among several strategies (sum, component-wise max, etc.). Importantly, the embeddings are learned jointly with all other model parameters through normal gradient descent backpropagation updates. Features are concatenated into a wide first layer, followed by several layers of fully connected Rectified Linear Units (ReLU) [6]. Figure 3 shows the general network architecture with additional non-video watch features described below.
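The following is a minimal pure-Python sketch of the candidate model described above, under several stated assumptions: the toy sizes (`VOCAB_SIZE`, `EMBED_DIM`, `HIDDEN`, `NUM_OTHER_FEATURES`) and all helper names are hypothetical, weights are random rather than trained, and an exact linear scan with `heapq.nlargest` stands in for the approximate nearest neighbor search used at serving time. The watch history is averaged into one fixed-size vector, concatenated with other features, passed through ReLU layers to produce a user vector `u`, and videos are ranked by the dot product of `u` with their embeddings.

```python
import heapq
import random

# Hypothetical toy sizes for illustration; they are not taken from the paper.
VOCAB_SIZE = 50           # videos in the fixed vocabulary
EMBED_DIM = 8             # width of each video embedding
NUM_OTHER_FEATURES = 2    # e.g. normalized age and logged-in state (assumed)
HIDDEN = [16, EMBED_DIM]  # ReLU tower widths; the last must equal EMBED_DIM for dot products

rng = random.Random(0)

def rand_matrix(rows, cols):
    """Small Gaussian weights; in the real system these are learned by backpropagation."""
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

# One row per video ID. Random here; learned jointly with the rest of the network in the text.
video_embeddings = rand_matrix(VOCAB_SIZE, EMBED_DIM)

def average_embeddings(video_ids):
    """Map a variable-length list of sparse video IDs to one fixed-size dense vector."""
    if not video_ids:
        return [0.0] * EMBED_DIM
    rows = [video_embeddings[vid] for vid in video_ids]
    return [sum(col) / len(rows) for col in zip(*rows)]

def build_tower(input_dim, widths):
    """Create (weights, bias) pairs for fully connected layers of the given widths."""
    layers = []
    for width in widths:
        layers.append((rand_matrix(width, input_dim), [0.0] * width))
        input_dim = width
    return layers

def dense_relu(x, weights, bias):
    """One fully connected layer followed by ReLU: max(0, Wx + b)."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, bias)]

def user_vector(watch_history, other_features, tower):
    """Concatenate the averaged watch embedding with other features, then apply the ReLU tower."""
    x = average_embeddings(watch_history) + list(other_features)
    for weights, bias in tower:
        x = dense_relu(x, weights, bias)
    assert len(x) == EMBED_DIM, "tower output must match the video embedding width"
    return x

def top_n_by_dot_product(u, n):
    """Exact top-N videos by dot product with u.

    A production system would replace this linear scan with an approximate
    nearest neighbor index to stay sublinear in the number of videos.
    """
    scores = ((sum(a * b for a, b in zip(u, v)), vid)
              for vid, v in enumerate(video_embeddings))
    return [vid for _, vid in heapq.nlargest(n, scores)]

# Usage: a user who watched videos 3, 17 and 42, with two hypothetical extra features.
tower = build_tower(EMBED_DIM + NUM_OTHER_FEATURES, HIDDEN)
u = user_vector([3, 17, 42], [0.5, 1.0], tower)
top5 = top_n_by_dot_product(u, 5)
print(top5)
```

Averaging keeps the first-layer input the same size no matter how many videos a user has watched, which is what the fixed-size input requirement demands; trying sum or component-wise max instead would only change `average_embeddings`.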
3.3 Heterogeneous Signals

A key advantage of using deep neural networks as a generalization of matrix factorization is that arbitrary continuous and categorical features can be easily added to the model. Search history is treated similarly to watch history: each query is tokenized into unigrams and bigrams and each token is embedded. Once averaged, the user's tokenized, embedded queries represent a summarized dense search history. Demographic features are important for providing priors so that the recommendations behave reasonably for new users. The user's geographic region and device are embedded and concatenated. Simple binary and continuous features such as the user's gender, logged-in state and age are input directly into the network as real values normalized to [0, 1].

“Example Age” Feature

Many hours' worth of videos are uploaded each second to YouTube. Recommending this recently uploaded (“fresh”) content is extremely important for YouTube as a product. We consistently observe that users prefer fresh content, though not at the expense of relevance. In addition to the first-order effect of simply recommending new videos that users want to watch, there is a critical secondary phenomenon of bootstrapping and propagating viral content [11].

Machine learning systems often exhibit an implicit bias towards the past because they are trained to predict future behavior from historical examples. The distribution of video popularity is highly non-stationary, but the multinomial distribution over the corpus produced by our recommender will reflect the average watch likelihood in the training window of several weeks. To correct for this, we feed the age of the training example as a feature during training. At serving time, this feature is set to zero (or slightly negative) to reflect that the model is making predictions at the very end of the training window. Figure 4 demonstrates the efficacy of this approach on an arbitrarily chosen video [26].
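A small sketch of the feature handling described above, under stated assumptions. Queries are split on whitespace into unigrams plus adjacent-word bigrams and every token embedding is averaged. `token_embedding` is a hypothetical stand-in for a learned embedding table (a deterministic pseudo-random vector seeded by `zlib.crc32`). Example age is assumed here to be the number of days between when a watch was logged and the end of the training window, which makes zero the natural serving-time value; `normalize` shows one assumed way (min-max with clipping) of mapping a continuous feature into [0, 1]. All names and `EMBED_DIM` are hypothetical.

```python
import random
import zlib

EMBED_DIM = 4  # hypothetical token embedding width, for illustration only

def tokenize(query):
    """Split a query into whitespace unigrams plus adjacent-word bigrams."""
    words = query.split()
    bigrams = [f"{a} {b}" for a, b in zip(words, words[1:])]
    return words + bigrams

def token_embedding(token):
    """Stand-in for a learned embedding table: a deterministic pseudo-random vector per token."""
    rng = random.Random(zlib.crc32(token.encode("utf-8")))
    return [rng.uniform(-1.0, 1.0) for _ in range(EMBED_DIM)]

def search_history_vector(queries):
    """Average the embeddings of every token across all of the user's queries."""
    tokens = [t for q in queries for t in tokenize(q)]
    if not tokens:
        return [0.0] * EMBED_DIM
    vectors = [token_embedding(t) for t in tokens]
    return [sum(col) / len(vectors) for col in zip(*vectors)]

def normalize(value, lo, hi):
    """Min-max scale a continuous feature into [0, 1], clipping out-of-range values."""
    return min(1.0, max(0.0, (value - lo) / (hi - lo)))

def example_age_days(logged_at_day, window_end_day):
    """Assumed training-time example age: days from the logged watch to the end of the window."""
    return window_end_day - logged_at_day

# At serving time the model predicts "at the end of the training window", so age is zero.
SERVING_EXAMPLE_AGE = 0.0

print(tokenize("funny cat videos"))
print(example_age_days(logged_at_day=10, window_end_day=30))
```

The first call prints `['funny', 'cat', 'videos', 'funny cat', 'cat videos']` and the second prints `20`. Because the model sees a range of example ages during training, fixing the value at zero when serving asks it for the popularity it expects at the newest point in the window rather than the window-wide average.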
[Figure 4 plot: Class Probability (0.000 to 0.008) vs. Days Since Upload (−30 to 40); series: Baseline Model, With Example Age, Empirical Distribution]

Figure 4: For a given video [26], the model trained with example age as a feature is able to accurately represent the upload time and time-dependent popularity observed in the data. Without the feature, the model would predict approximately the average likelihood over the training window.

3.4 Label and Context Selection

It is important to emphasize that recommendation often involves solving a surrogate problem and transferring the result to a particular context. A classic example is the assumption that accurately predicting ratings leads to effective movie recommendations [2]. We have found that the choice of this surrogate learning problem has an outsized importance on performance in A/B testing but is very difficult to measure with offline experiments.

Training examples are generated from all YouTube watches (even those embedded on other sites) rather than just watches on the recommendations we produce. Otherwise, it would be very difficult for new content to surface and the recommender would be overly biased towards exploitation. If users are discovering videos through means other than our recommendations, we want to be able to quickly propagate this discovery to others via collaborative filtering.

Another key insight that improved live metrics was to generate a fixed number of training examples per user, effectively weighting our users equally in the loss function. This prevented a small cohort of highly active users from dominating the loss.

Somewhat counter-intuitively, great care must be taken to withhold information from the classifier in order to prevent the model from exploiting the structure of the site and overfitting the surrogate problem. Consider as an example a

PDF Search Title:

Deep Neural Networks for YouTube Recommendations

Original File Name Searched:

45530.pdf
