
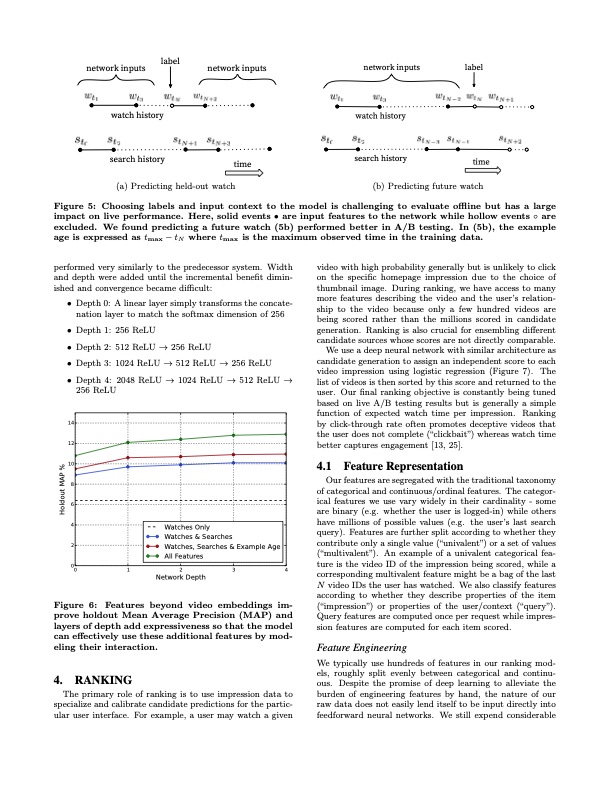

Deep Neural Networks for YouTube Recommendations

[Figure 5 image: panels (a) Predicting held-out watch and (b) Predicting future watch, each showing watch history and search history events along a time axis, marked as network inputs or label.]
Figure 5: Choosing labels and input context to the model is challenging to evaluate offline but has a large impact on live performance. Here, solid events • are input features to the network while hollow events ◦ are excluded. We found predicting a future watch (5b) performed better in A/B testing. In (5b), the example age is expressed as t_max − t_N, where t_max is the maximum observed time in the training data.

performed very similarly to the predecessor system. Width and depth were added until the incremental benefit diminished and convergence became difficult:
• Depth 0: A linear layer simply transforms the concatenation layer to match the softmax dimension of 256
• Depth 1: 256 ReLU
• Depth 2: 512 ReLU → 256 ReLU
• Depth 3: 1024 ReLU → 512 ReLU → 256 ReLU
• Depth 4: 2048 ReLU → 1024 ReLU → 512 ReLU → 256 ReLU

[Figure 6 image: holdout MAP (%) versus network depth (0 to 4) for four feature sets: Watches Only; Watches & Searches; Watches, Searches & Example Age; All Features.]
Figure 6: Features beyond video embeddings improve holdout Mean Average Precision (MAP), and layers of depth add expressiveness so that the model can effectively use these additional features by modeling their interaction.

4. RANKING
The primary role of ranking is to use impression data to specialize and calibrate candidate predictions for the particular user interface. For example, a user may watch a given video with high probability generally but is unlikely to click on the specific homepage impression due to the choice of thumbnail image. During ranking, we have access to many more features describing the video and the user’s relationship to the video because only a few hundred videos are being scored rather than the millions scored in candidate generation. Ranking is also crucial for ensembling different candidate sources whose scores are not directly comparable.

We use a deep neural network with an architecture similar to that of candidate generation to assign an independent score to each video impression using logistic regression (Figure 7). The list of videos is then sorted by this score and returned to the user. Our final ranking objective is constantly being tuned based on live A/B testing results but is generally a simple function of expected watch time per impression. Ranking by click-through rate often promotes deceptive videos that the user does not complete (“clickbait”), whereas watch time better captures engagement [13, 25].

4.1 Feature Representation
Our features are segregated according to the traditional taxonomy of categorical and continuous/ordinal features. The categorical features we use vary widely in their cardinality: some are binary (e.g., whether the user is logged in) while others have millions of possible values (e.g., the user’s last search query). Features are further split according to whether they contribute only a single value (“univalent”) or a set of values (“multivalent”). An example of a univalent categorical feature is the video ID of the impression being scored, while a corresponding multivalent feature might be a bag of the last N video IDs the user has watched. We also classify features according to whether they describe properties of the item (“impression”) or properties of the user/context (“query”). Query features are computed once per request, while impression features are computed for each item scored.

Feature Engineering
We typically use hundreds of features in our ranking models, roughly split evenly between categorical and continuous. Despite the promise of deep learning to alleviate the burden of engineering features by hand, the nature of our raw data does not easily lend itself to being input directly into feedforward neural networks. We still expend considerable
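The Figure 5 caption contrasts two ways of choosing the label and input context. The following is a minimal Python sketch of the future-watch setup (5b), assuming a hypothetical `Event` record per watch or search and assuming that t_N is the timestamp of the labeled watch: only events strictly earlier than the label are kept as inputs, and the example age is t_max − t_N. For contrast, `held_out_inputs` shows the held-out setup (5a), where every other event, including later ones, would be an input. All names here are illustrative and are not taken from the paper.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Event:
    kind: str    # "watch" or "search" (hypothetical schema)
    item: str    # video ID or search query
    time: float  # timestamp, in any consistent unit


@dataclass
class TrainingExample:
    inputs: List[Event]
    label: str
    example_age: float


def future_watch_example(history: List[Event], label_idx: int, t_max: float) -> TrainingExample:
    """Figure 5b style: predict a watch from the events strictly before it.

    Assumes t_N is the timestamp of the labeled watch and t_max is the
    maximum time observed anywhere in the training data.
    """
    label_event = history[label_idx]
    if label_event.kind != "watch":
        raise ValueError("the label must be a watch event")
    t_n = label_event.time
    inputs = [e for e in history if e.time < t_n]  # later events are excluded
    return TrainingExample(inputs=inputs, label=label_event.item, example_age=t_max - t_n)


def held_out_inputs(history: List[Event], label_idx: int) -> List[Event]:
    """Figure 5a style, for contrast: every other event is an input, including later ones."""
    return [e for i, e in enumerate(history) if i != label_idx]
```

With a history of watches at times 1, 3, and 5 and a search at time 2, labeling the watch at time 3 with t_max = 10 keeps only the first watch and the search as inputs and gives an example age of 7, whereas the held-out variant would also feed in the watch at time 5.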

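The depth configurations listed before Figure 6 follow a simple pattern: every tower ends at the 256-wide softmax dimension, and each extra level doubles the width of the bottom layer. The plain-Python sketch below only derives the layer shapes and parameter counts for each depth. `concat_dim` (the width of the concatenated input features) is a hypothetical parameter. The bias terms counted in `num_params` are also an assumption, since the text does not say whether the layers use biases.

```python
from typing import List, Tuple

SOFTMAX_DIM = 256  # every tower ends at the softmax dimension of 256


def tower_widths(depth: int) -> List[int]:
    """Hidden ReLU widths, bottom to top, for Depth 1..4; Depth 0 has none."""
    if not 0 <= depth <= 4:
        raise ValueError("depth must be between 0 and 4")
    # Depth k stacks 256 * 2**(k-1) -> ... -> 512 -> 256.
    return [SOFTMAX_DIM * 2 ** i for i in reversed(range(depth))]


def layer_shapes(concat_dim: int, depth: int) -> List[Tuple[int, int, str]]:
    """(input width, output width, activation) for each dense layer."""
    widths = tower_widths(depth)
    if not widths:
        # Depth 0: a single linear layer maps the concatenation to 256.
        return [(concat_dim, SOFTMAX_DIM, "linear")]
    shapes = []
    in_dim = concat_dim
    for width in widths:
        shapes.append((in_dim, width, "relu"))
        in_dim = width
    return shapes


def num_params(shapes: List[Tuple[int, int, str]]) -> int:
    """Weights plus (assumed) biases across all dense layers."""
    return sum(i * o + o for i, o, _ in shapes)
```

For example, `layer_shapes(100, 2)` yields a 100→512 ReLU layer followed by a 512→256 ReLU layer, matching the Depth 2 entry.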

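The ranking step in Section 4 scores each impression independently and then sorts by that score. Because every candidate passes through the same model, this also places candidates from different sources on a common scale. The sketch below is minimal and swaps the deep network for a plain linear logistic-regression model over a hypothetical feature vector. The `Candidate` fields, weights, and bias are illustrative assumptions. Each candidate carries its per-source score, but the sketch deliberately ignores it, since such scores are not directly comparable across sources.

```python
import math
from typing import List, NamedTuple, Sequence


class Candidate(NamedTuple):
    video_id: str
    source: str            # which candidate generator proposed it
    source_score: float    # that generator's own score; not comparable across sources
    features: List[float]  # hypothetical ranking features for this impression


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def score(features: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Logistic-regression score for a single impression, computed independently."""
    return sigmoid(bias + sum(w * x for w, x in zip(weights, features)))


def rank(candidates: List[Candidate], weights: Sequence[float], bias: float) -> List[str]:
    """Sort candidates from all sources by the shared score, highest first.

    source_score is ignored on purpose: every candidate is re-scored by one model.
    """
    ordered = sorted(candidates, key=lambda c: score(c.features, weights, bias), reverse=True)
    return [c.video_id for c in ordered]
```

Here a candidate with a very large source score gets no advantage unless its features also earn a high ranking score.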

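A toy comparison shows why a watch-time objective can demote clickbait relative to click-through rate. For illustration only, this sketch assumes that expected watch time per impression equals the predicted click probability times the predicted seconds watched given a click. The text itself says only that the objective is “a simple function of expected watch time per impression”. The `Impression` fields are hypothetical.

```python
from typing import List, NamedTuple


class Impression(NamedTuple):
    video_id: str
    p_click: float              # predicted probability the impression is clicked
    watch_secs_if_click: float  # predicted seconds watched, given a click


def expected_watch_time(imp: Impression) -> float:
    """Assumed objective: P(click) * E[watch seconds | click]."""
    return imp.p_click * imp.watch_secs_if_click


def rank_by_ctr(imps: List[Impression]) -> List[str]:
    return [i.video_id for i in sorted(imps, key=lambda i: i.p_click, reverse=True)]


def rank_by_watch_time(imps: List[Impression]) -> List[str]:
    return [i.video_id for i in sorted(imps, key=expected_watch_time, reverse=True)]
```

Consider two made-up impressions, `Impression("clickbait", 0.30, 20.0)` and `Impression("tutorial", 0.10, 300.0)`. Here `rank_by_ctr` puts the clickbait video first, while `rank_by_watch_time` puts the tutorial first (30 versus 6 expected seconds).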

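The feature taxonomy in Section 4.1 amounts to three independent labels per feature: categorical or continuous, univalent or multivalent, and query or impression. The sketch below works under stated assumptions. The `FeatureSpec` record and the feature names are hypothetical, drawn from the examples in the text where possible. `build_rows` illustrates the cost split by calling the query-feature function once per request and the impression-feature function once per scored item.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Features = Dict[str, Any]


@dataclass(frozen=True)
class FeatureSpec:
    name: str
    kind: str     # "categorical" or "continuous"
    valence: str  # "univalent" (single value) or "multivalent" (set/bag of values)
    scope: str    # "query" (user/context) or "impression" (item being scored)


# Hypothetical schema mirroring the examples given in Section 4.1.
SCHEMA = [
    FeatureSpec("user_logged_in", "categorical", "univalent", "query"),     # binary
    FeatureSpec("last_search_query", "categorical", "univalent", "query"),  # millions of values
    FeatureSpec("last_n_watched_video_ids", "categorical", "multivalent", "query"),
    FeatureSpec("impression_video_id", "categorical", "univalent", "impression"),
]


def build_rows(
    request: Features,
    candidates: List[str],
    query_fn: Callable[[Features], Features],
    impression_fn: Callable[[Features, str], Features],
) -> List[Features]:
    """One feature row per candidate; query features are shared by all rows."""
    query_features = query_fn(request)  # computed once per request
    return [{**query_features, **impression_fn(request, c)} for c in candidates]


# Usage: count how often each function runs for a three-candidate request.
calls = {"query": 0, "impression": 0}


def demo_query_fn(request: Features) -> Features:
    calls["query"] += 1
    return {
        "user_logged_in": request["logged_in"],
        "last_n_watched_video_ids": tuple(request["history"]),
    }


def demo_impression_fn(request: Features, video_id: str) -> Features:
    calls["impression"] += 1
    return {"impression_video_id": video_id}


rows = build_rows({"logged_in": True, "history": ["v1", "v2"]}, ["a", "b", "c"],
                  demo_query_fn, demo_impression_fn)
```

After this runs, `calls` shows one query computation and three impression computations, which is why per-request query features are cheap relative to per-item impression features when hundreds of videos are scored.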