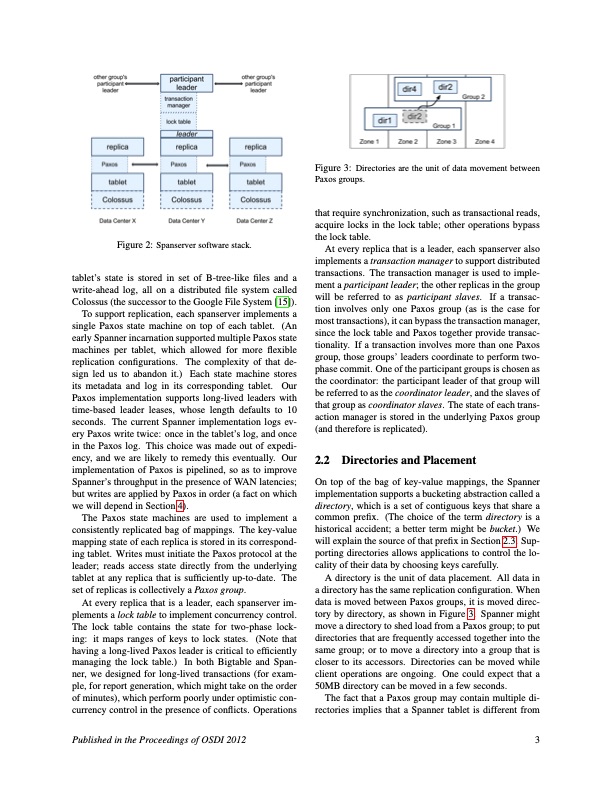

Figure 2: Spanserver software stack.

tablet’s state is stored in a set of B-tree-like files and a write-ahead log, all on a distributed file system called Colossus (the successor to the Google File System [15]).

To support replication, each spanserver implements a single Paxos state machine on top of each tablet. (An early Spanner incarnation supported multiple Paxos state machines per tablet, which allowed for more flexible replication configurations. The complexity of that design led us to abandon it.) Each state machine stores its metadata and log in its corresponding tablet. Our Paxos implementation supports long-lived leaders with time-based leader leases, whose length defaults to 10 seconds. The current Spanner implementation logs every Paxos write twice: once in the tablet’s log, and once in the Paxos log. This choice was made out of expediency, and we are likely to remedy this eventually. Our implementation of Paxos is pipelined, so as to improve Spanner’s throughput in the presence of WAN latencies; but writes are applied by Paxos in order (a fact on which we will depend in Section 4).

The Paxos state machines are used to implement a consistently replicated bag of mappings. The key-value mapping state of each replica is stored in its corresponding tablet. Writes must initiate the Paxos protocol at the leader; reads access state directly from the underlying tablet at any replica that is sufficiently up-to-date. The set of replicas is collectively a Paxos group.

At every replica that is a leader, each spanserver implements a lock table to implement concurrency control. The lock table contains the state for two-phase locking: it maps ranges of keys to lock states. (Note that having a long-lived Paxos leader is critical to efficiently managing the lock table.)
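To make the idea of a table that "maps ranges of keys to lock states" concrete, here is a minimal sketch in Python. It is an illustration only, not Spanner's implementation: the names `Mode`, `RangeLock`, `LockTable`, `try_acquire`, and `release_all` are hypothetical, as are the choice of half-open string key ranges, the shared/exclusive modes, and the linear scan over held locks.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    SHARED = "shared"
    EXCLUSIVE = "exclusive"


@dataclass(frozen=True)
class RangeLock:
    start: str  # inclusive start key
    end: str    # exclusive end key
    mode: Mode
    txn: str    # hypothetical transaction identifier


class LockTable:
    """Toy lock table: maps half-open key ranges [start, end) to lock states."""

    def __init__(self):
        self._locks = []

    def try_acquire(self, txn, start, end, mode):
        """Grant the lock and return True unless another transaction conflicts."""
        if not start < end:
            raise ValueError("empty key range")
        for held in self._locks:
            overlaps = held.start < end and start < held.end
            exclusive = Mode.EXCLUSIVE in (held.mode, mode)
            if held.txn != txn and overlaps and exclusive:
                return False
        self._locks.append(RangeLock(start, end, mode, txn))
        return True

    def release_all(self, txn):
        """Release every lock held by txn, e.g. when it commits or aborts."""
        self._locks = [lock for lock in self._locks if lock.txn != txn]
```

In this sketch two shared locks on overlapping ranges coexist, while an exclusive request that overlaps another transaction's range is refused. A real lock table would presumably also queue waiters and detect or avoid deadlock, which this sketch leaves out.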
In both Bigtable and Spanner, we designed for long-lived transactions (for example, for report generation, which might take on the order of minutes), which perform poorly under optimistic concurrency control in the presence of conflicts. Operations that require synchronization, such as transactional reads, acquire locks in the lock table; other operations bypass the lock table.

Figure 3: Directories are the unit of data movement between Paxos groups.

At every replica that is a leader, each spanserver also implements a transaction manager to support distributed transactions. The transaction manager is used to implement a participant leader; the other replicas in the group will be referred to as participant slaves. If a transaction involves only one Paxos group (as is the case for most transactions), it can bypass the transaction manager, since the lock table and Paxos together provide transactionality. If a transaction involves more than one Paxos group, those groups’ leaders coordinate to perform two-phase commit. One of the participant groups is chosen as the coordinator: the participant leader of that group will be referred to as the coordinator leader, and the slaves of that group as coordinator slaves. The state of each transaction manager is stored in the underlying Paxos group (and therefore is replicated).

2.2 Directories and Placement

On top of the bag of key-value mappings, the Spanner implementation supports a bucketing abstraction called a directory, which is a set of contiguous keys that share a common prefix. (The choice of the term directory is a historical accident; a better term might be bucket.) We will explain the source of that prefix in Section 2.3. Supporting directories allows applications to control the locality of their data by choosing keys carefully.

A directory is the unit of data placement. All data in a directory has the same replication configuration.
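The directory abstraction can be illustrated with a small Python sketch. Everything here is an assumption made for illustration: the rule that a key's directory prefix is the text before its first `/` is hypothetical (the paper defers the real source of the prefix to Section 2.3), and the names `directory_prefix`, `split_into_directories`, and `Placement` do not come from Spanner.

```python
def directory_prefix(key):
    """Hypothetical prefix rule: everything before the first '/'."""
    return key.split("/", 1)[0]


def split_into_directories(keys):
    """Bucket keys by their directory prefix, keeping each bucket sorted."""
    directories = {}
    for key in sorted(keys):
        directories.setdefault(directory_prefix(key), []).append(key)
    return directories


class Placement:
    """Toy placement map: each directory lives in exactly one Paxos group."""

    def __init__(self):
        self.group_of = {}  # directory prefix -> Paxos group name

    def assign(self, directory, group):
        self.group_of[directory] = group

    def move(self, directory, new_group):
        # Placement is per directory, so all of its keys move together.
        if directory not in self.group_of:
            raise KeyError(directory)
        self.group_of[directory] = new_group

    def group_for_key(self, key):
        return self.group_of[directory_prefix(key)]
```

Because lookups go through the prefix, an application that chooses keys such as `users/1` and `users/2` is guaranteed in this sketch that both live in the same group, which is the locality control the text describes.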
When data is moved between Paxos groups, it is moved directory by directory, as shown in Figure 3. Spanner might move a directory to shed load from a Paxos group; to put directories that are frequently accessed together into the same group; or to move a directory into a group that is closer to its accessors. Directories can be moved while client operations are ongoing. One could expect that a 50MB directory can be moved in a few seconds.

The fact that a Paxos group may contain multiple directories implies that a Spanner tablet is different from

Published in the Proceedings of OSDI 2012

