PDF Publication Title:

Text from PDF Page: 080

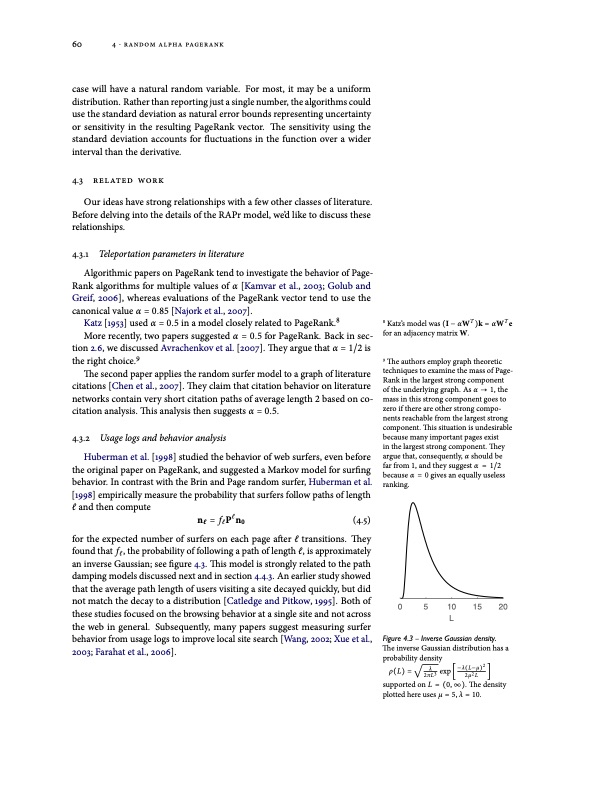

60 4 ⋅ random alpha pagerank case will have a natural random variable. For most, it may be a uniform distribution. Rather than reporting just a single number, the algorithms could use the standard deviation as natural error bounds representing uncertainty or sensitivity in the resulting PageRank vector. The sensitivity using the standard deviation accounts for fluctuations in the function over a wider interval than the derivative. 4.3 related work Our ideas have strong relationships with a few other classes of literature. Before delving into the details of the RAPr model, we’d like to discuss these relationships. 4.3.1 Teleportation parameters in literature Algorithmic papers on PageRank tend to investigate the behavior of Page- Rank algorithms for multiple values of α [Kamvar et al., 2003; Golub and Greif, 2006], whereas evaluations of the PageRank vector tend to use the canonical value α = 0.85 [Najork et al., 2007]. Katz [1953] used α = 0.5 in a model closely related to PageRank.8 More recently, two papers suggested α = 0.5 for PageRank. Back in sec- tion 2.6, we discussed Avrachenkov et al. [2007]. They argue that α = 1/2 is the right choice.9 The second paper applies the random surfer model to a graph of literature citations [Chen et al., 2007]. They claim that citation behavior on literature networks contain very short citation paths of average length 2 based on co- citation analysis. This analysis then suggests α = 0.5. 4.3.2 Usage logs and behavior analysis Huberman et al. [1998] studied the behavior of web surfers, even before the original paper on PageRank, and suggested a Markov model for surfing behavior. In contrast with the Brin and Page random surfer, Huberman et al. [1998] empirically measure the probability that surfers follow paths of length l and then compute n = f Pln (4.5) ðl0 for the expected number of surfers on each page after l transitions. They found that fl, the probability of following a path of length l, is approximately an inverse Gaussian; see figure 4.3. This model is strongly related to the path damping models discussed next and in section 4.4.3. An earlier study showed that the average path length of users visiting a site decayed quickly, but did not match the decay to a distribution [Catledge and Pitkow, 1995]. Both of these studies focused on the browsing behavior at a single site and not across the web in general. Subsequently, many papers suggest measuring surfer behavior from usage logs to improve local site search [Wang, 2002; Xue et al., 2003; Farahat et al., 2006]. 8 Katz’s model was (I − αWT )k = αWT e for an adjacency matrix W. 9 The authors employ graph theoretic techniques to examine the mass of Page- Rank in the largest strong component of the underlying graph. As α → 1, the mass in this strong component goes to zero if there are other strong compo- nents reachable from the largest strong component. This situation is undesirable because many important pages exist in the largest strong component. They argue that, consequently, α should be far from 1, and they suggest α = 1/2 because α = 0 gives an equally useless ranking. 0 5 10 15 20 L Figure 4.3 – Inverse Gaussian density. The inverse Gaussian distribution has a probability density √2 ρ(L) = λ exp[−λ(L−μ) ] 2πL3 2μ2 L supported on L = (0, ∞). The density plotted here uses μ = 5, λ = 10. densityPDF Image | MODELS AND ALGORITHMS FOR PAGERANK SENSITIVITY

PDF Search Title:

MODELS AND ALGORITHMS FOR PAGERANK SENSITIVITYOriginal File Name Searched:

gleich-pagerank-thesis.pdfDIY PDF Search: Google It | Yahoo | Bing

Cruise Ship Reviews | Luxury Resort | Jet | Yacht | and Travel Tech More Info

Cruising Review Topics and Articles More Info

Software based on Filemaker for the travel industry More Info

The Burgenstock Resort: Reviews on CruisingReview website... More Info

Resort Reviews: World Class resorts... More Info

The Riffelalp Resort: Reviews on CruisingReview website... More Info

| CONTACT TEL: 608-238-6001 Email: greg@cruisingreview.com | RSS | AMP |