
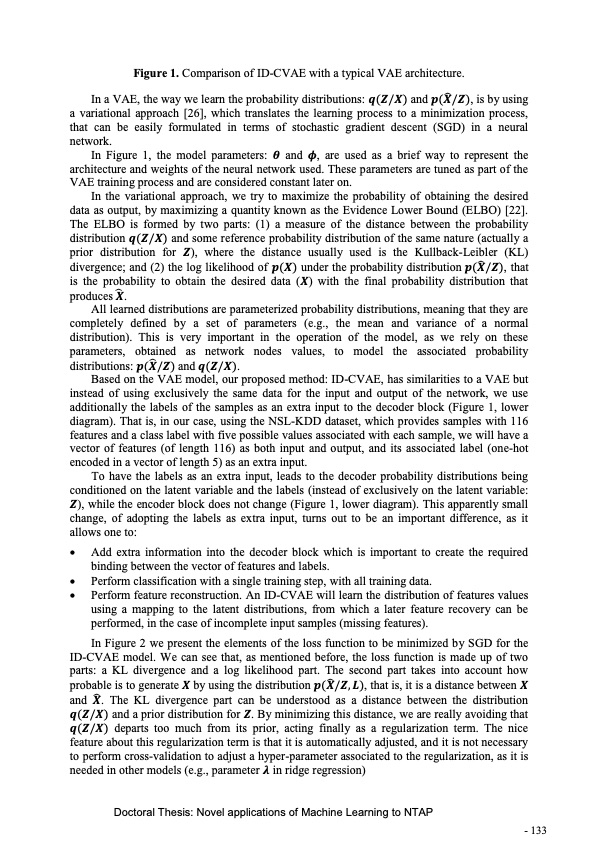

Figure 1. Comparison of ID-CVAE with a typical VAE architecture.

In a VAE, we learn the probability distributions 𝒒(𝒁/𝑿) and 𝒑(𝑿̂/𝒁) (the slash denotes conditioning) using a variational approach [26], which turns the learning process into a minimization problem that can be readily formulated in terms of stochastic gradient descent (SGD) in a neural network. In Figure 1, the model parameters 𝜽 and 𝝓 are shorthand for the architecture and weights of the neural network used. These parameters are tuned during VAE training and are held constant afterwards.

In the variational approach, we try to maximize the probability of obtaining the desired data as output by maximizing a quantity known as the Evidence Lower Bound (ELBO) [22]. The ELBO has two parts: (1) a measure of the distance between the probability distribution 𝒒(𝒁/𝑿) and a reference probability distribution of the same nature (in practice, a prior distribution for 𝒁), where the distance usually used is the Kullback-Leibler (KL) divergence; and (2) the log likelihood of 𝒑(𝑿) under the probability distribution 𝒑(𝑿̂/𝒁), that is, the probability of obtaining the desired data (𝑿) with the final probability distribution that produces 𝑿̂.

All learned distributions are parameterized probability distributions, meaning that they are completely defined by a set of parameters (e.g., the mean and variance of a normal distribution). This matters for the operation of the model, because we rely on these parameters, obtained as the values of network nodes, to model the associated probability distributions 𝒑(𝑿̂/𝒁) and 𝒒(𝒁/𝑿).

Building on the VAE model, our proposed method, ID-CVAE, resembles a VAE, but instead of using only the same data for the input and output of the network, we additionally use the labels of the samples as an extra input to the decoder block (Figure 1, lower diagram).
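For reference, the two-part ELBO described in this section is commonly written as shown below. This is the standard textbook form, not a formula taken from the thesis: it uses "|" for conditioning where the text uses "/", and the closed-form KL expression additionally assumes a diagonal Gaussian 𝒒(𝒁/𝑿) with mean μ and variance σ² together with a standard normal prior for 𝒁, neither of which is stated in the text.

$$
\log p(X) \;\ge\; \mathrm{ELBO} = \mathbb{E}_{q(Z \mid X)}\big[\log p(X \mid Z)\big] - \mathrm{KL}\big(q(Z \mid X)\,\|\,p(Z)\big)
$$

$$
\mathrm{KL}\big(\mathcal{N}(\mu, \operatorname{diag}(\sigma^2))\,\|\,\mathcal{N}(0, I)\big) = \frac{1}{2}\sum_{j}\big(\mu_j^2 + \sigma_j^2 - 1 - \log \sigma_j^2\big)
$$

Maximizing the ELBO is equivalent to minimizing its negative, which is the sum of the KL term and the negative log likelihood term.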
In our case, using the NSL-KDD dataset, which provides samples with 116 features and a class label with five possible values for each sample, we have a vector of features (of length 116) as both input and output, and its associated label (one-hot encoded in a vector of length 5) as an extra input. Using the labels as an extra input makes the decoder probability distributions conditional on both the latent variable and the labels (instead of on the latent variable 𝒁 alone), while the encoder block does not change (Figure 1, lower diagram). This apparently small change turns out to be an important difference, as it allows one to:

- Add extra information to the decoder block, which is important for creating the required binding between the vector of features and the labels.
- Perform classification with a single training step, using all the training data.
- Perform feature reconstruction. An ID-CVAE learns the distribution of feature values through a mapping to the latent distributions, from which features can later be recovered when input samples are incomplete (missing features).

In Figure 2 we present the elements of the loss function to be minimized by SGD for the ID-CVAE model. As mentioned before, the loss function is made up of two parts: a KL divergence and a log likelihood part. The second part accounts for how probable it is to generate 𝑿 using the distribution 𝒑(𝑿̂/𝒁, 𝑳); that is, it acts as a distance between 𝑿 and 𝑿̂. The KL divergence part can be understood as a distance between the distribution 𝒒(𝒁/𝑿) and a prior distribution for 𝒁. Minimizing this distance prevents 𝒒(𝒁/𝑿) from departing too much from its prior, so this term ultimately acts as a regularization term.
A convenient property of this regularization term is that it is adjusted automatically: there is no need to perform cross-validation to tune a regularization hyper-parameter, as is required in other models (e.g., the parameter 𝝀 in ridge regression).
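The two-part loss and the label-conditioned decoder described above can be illustrated with a minimal NumPy sketch. It is not the thesis implementation. The feature and label sizes (116 and 5) come from the text, but the latent and hidden sizes, the random untrained weights, the Gaussian encoder with a standard normal prior, and the Bernoulli (cross-entropy) reconstruction term are all assumptions made only for illustration, and every function name is hypothetical.

```python
import numpy as np

N_FEATURES, N_CLASSES = 116, 5   # sizes quoted in the text for NSL-KDD
LATENT_DIM, HIDDEN = 25, 64      # hypothetical sizes, for illustration only

rng = np.random.default_rng(0)

def dense(n_in, n_out):
    """Random, untrained weights and zero bias for one dense layer."""
    return rng.normal(0.0, 0.1, (n_in, n_out)), np.zeros(n_out)

# The encoder sees only the features X; the decoder sees [Z, L] concatenated.
W_enc, b_enc = dense(N_FEATURES, HIDDEN)
W_mu, b_mu = dense(HIDDEN, LATENT_DIM)
W_logvar, b_logvar = dense(HIDDEN, LATENT_DIM)
W_dec, b_dec = dense(LATENT_DIM + N_CLASSES, HIDDEN)
W_out, b_out = dense(HIDDEN, N_FEATURES)

def one_hot(labels, n_classes=N_CLASSES):
    """Turn integer class labels into one-hot rows of length n_classes."""
    return np.eye(n_classes)[labels]

def encode(x):
    """Parameters (mean, log-variance) of an assumed Gaussian q(Z/X)."""
    h = np.maximum(0.0, x @ W_enc + b_enc)  # ReLU
    return h @ W_mu + b_mu, h @ W_logvar + b_logvar

def decode(z, label_onehot):
    """Reconstruction X_hat, conditioned on both Z and the label L."""
    zl = np.concatenate([z, label_onehot], axis=1)
    h = np.maximum(0.0, zl @ W_dec + b_dec)
    return 1.0 / (1.0 + np.exp(-(h @ W_out + b_out)))  # sigmoid

def id_cvae_loss(x, labels):
    """Return (total, KL part, reconstruction part), averaged over the batch."""
    mu, logvar = encode(x)
    eps = rng.standard_normal(mu.shape)
    z = mu + np.exp(0.5 * logvar) * eps  # reparameterized sample of Z
    x_hat = decode(z, one_hot(labels))
    # Closed-form KL between N(mu, diag(exp(logvar))) and the assumed N(0, I) prior.
    kl = 0.5 * np.sum(mu**2 + np.exp(logvar) - 1.0 - logvar, axis=1)
    # Negative Bernoulli log likelihood of X under the decoder output.
    tiny = 1e-7
    recon = -np.sum(x * np.log(x_hat + tiny)
                    + (1.0 - x) * np.log(1.0 - x_hat + tiny), axis=1)
    return np.mean(kl + recon), np.mean(kl), np.mean(recon)

x = rng.random((8, N_FEATURES))          # fake features scaled to [0, 1]
labels = rng.integers(0, N_CLASSES, 8)   # fake class labels in {0, ..., 4}
total, kl, recon = id_cvae_loss(x, labels)
print(f"loss={total:.3f}  KL={kl:.3f}  reconstruction={recon:.3f}")
```

Because the labels enter only through `decode`, changing `labels` for a fixed `x` alters `x_hat` but leaves `mu` and `logvar` untouched, which mirrors the statement that the encoder block does not change.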


Novel applications of Machine Learning to Network Traffic Analysis
