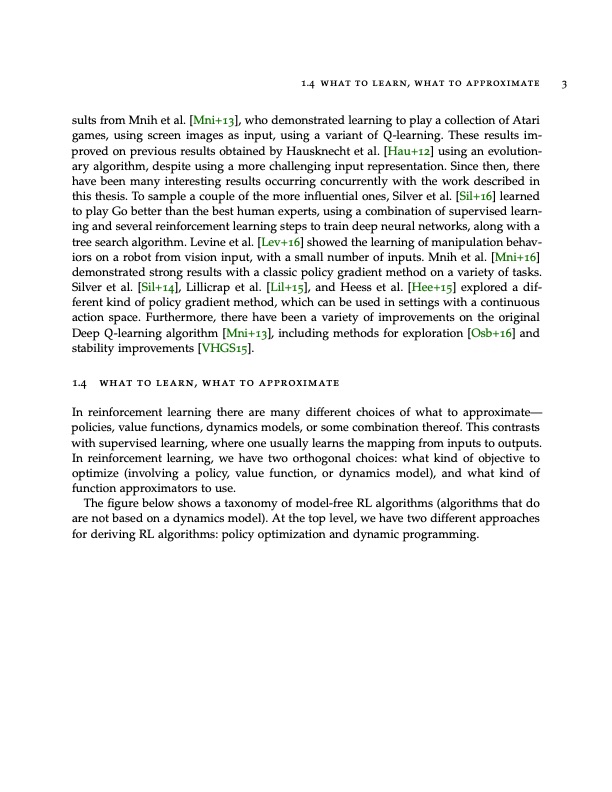

... results from Mnih et al. [Mni+13], who demonstrated learning to play a collection of Atari games from screen images, using a variant of Q-learning. These results improved on previous results obtained by Hausknecht et al. [Hau+12] using an evolutionary algorithm, despite using a more challenging input representation. Since then, there have been many interesting results occurring concurrently with the work described in this thesis. To sample a couple of the more influential ones, Silver et al. [Sil+16] learned to play Go better than the best human experts, using a combination of supervised learning and several reinforcement learning steps to train deep neural networks, along with a tree search algorithm. Levine et al. [Lev+16] showed the learning of manipulation behaviors on a robot from vision input, with a small number of inputs. Mnih et al. [Mni+16] demonstrated strong results with a classic policy gradient method on a variety of tasks. Silver et al. [Sil+14], Lillicrap et al. [Lil+15], and Heess et al. [Hee+15] explored a different kind of policy gradient method, which can be used in settings with a continuous action space. Furthermore, there have been a variety of improvements on the original Deep Q-learning algorithm [Mni+13], including methods for exploration [Osb+16] and stability improvements [VHGS15].

1.4 what to learn, what to approximate

In reinforcement learning there are many different choices of what to approximate: policies, value functions, dynamics models, or some combination thereof. This contrasts with supervised learning, where one usually learns the mapping from inputs to outputs. In reinforcement learning, we have two orthogonal choices: what kind of objective to optimize (involving a policy, value function, or dynamics model), and what kind of function approximators to use.
The figure below shows a taxonomy of model-free RL algorithms (algorithms that are not based on a dynamics model). At the top level, we have two different approaches for deriving RL algorithms: policy optimization and dynamic programming.
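To make the two top-level approaches concrete, here is a minimal sketch on a toy problem. Everything in it is an assumption made for illustration and does not come from the thesis: the two-step chain environment, the function names `step`, `policy_gradient`, and `q_learning`, the tabular parameterization, and the hyperparameters. The first learner is a plain REINFORCE-style policy gradient (policy optimization); the second is tabular Q-learning, which stands in for the dynamic programming family because it learns from bootstrapped backups.

```python
import math
import random

# Hypothetical toy environment (not from the source): a two-step chain.
# Action 1 ("right") moves 0 -> 1 -> terminal; the final step pays reward 1.
# Action 0 ("quit") ends the episode immediately with reward 0.
N_STATES, N_ACTIONS = 2, 2


def step(state, action):
    """Return (next_state, reward, done) for the toy chain."""
    if action == 0:
        return state, 0.0, True
    if state == 0:
        return 1, 0.0, False
    return state, 1.0, True


def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def policy_gradient(episodes=2000, lr=0.5, seed=0):
    """Policy optimization: adjust the policy parameters directly (REINFORCE)."""
    rng = random.Random(seed)
    theta = [[0.0] * N_ACTIONS for _ in range(N_STATES)]  # softmax logits per state
    for _ in range(episodes):
        state, done, visited, ret = 0, False, [], 0.0
        while not done:
            probs = softmax(theta[state])
            action = rng.choices(range(N_ACTIONS), weights=probs)[0]
            visited.append((state, action, probs))
            state, reward, done = step(state, action)
            ret += reward
        # Score-function update: return times grad of log pi(a | s).
        for s, a, probs in visited:
            for b in range(N_ACTIONS):
                grad_log = (1.0 if b == a else 0.0) - probs[b]
                theta[s][b] += lr * ret * grad_log
    return [softmax(row) for row in theta]


def q_learning(episodes=2000, alpha=0.5, gamma=1.0, seed=0):
    """Dynamic-programming flavour: learn Q by bootstrapped backups."""
    rng = random.Random(seed)
    q = [[0.0] * N_ACTIONS for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            action = rng.randrange(N_ACTIONS)  # uniform random exploration
            next_state, reward, done = step(state, action)
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


if __name__ == "__main__":
    print("policy probabilities per state:", policy_gradient())
    print("Q-values per state:", q_learning())
```

Both learners use the same tabular representation here, which reflects the point that the choice of objective is separate from the choice of function approximator. Either table could be swapped for a neural network without changing which family the algorithm belongs to.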

Title: OPTIMIZING EXPECTATIONS: FROM DEEP REINFORCEMENT LEARNING TO STOCHASTIC COMPUTATION GRAPHS

Original file name: thesis-optimizing-deep-learning.pdf
