PDF Publication Title:

Text from PDF Page: 017

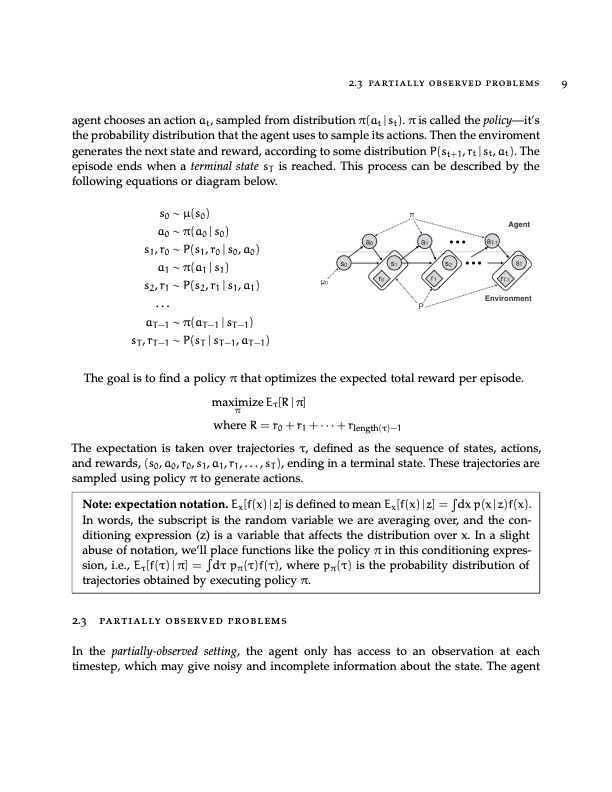

2.3 partially observed problems 9 agent chooses an action at, sampled from distribution π(at | st). π is called the policy—it’s the probability distribution that the agent uses to sample its actions. Then the enviroment generates the next state and reward, according to some distribution P(st+1, rt | st, at). The episode ends when a terminal state sT is reached. This process can be described by the following equations or diagram below. s0 ∼ μ(s0) a0 ∼ π(a0 | s0) s1,r0 ∼P(s1,r0|s0,a0) a1 ∼ π(a1 | s1) s2,r1 ∼P(s2,r1|s1,a1) ... aT−1 ∼π(aT−1|sT−1) sT,rT−1 ∼ P(sT |sT−1,aT−1) The goal is to find a policy π that optimizes the expected total reward per episode. maximize Eτ[R | π] π where R = r0 +r1 +···+rlength(τ)−1 The expectation is taken over trajectories τ, defined as the sequence of states, actions, and rewards, (s0, a0, r0, s1, a1, r1, . . . , sT ), ending in a terminal state. These trajectories are sampled using policy π to generate actions. π a0 a1 aT-1 Agent μ0 r0 s0 s1 s2 sT r1 rT-1 Environment P Note: expectation notation. Ex[f(x) | z] is defined to mean Ex[f(x) | z] = dx p(x | z)f(x). In words, the subscript is the random variable we are averaging over, and the con- ditioning expression (z) is a variable that affects the distribution over x. In a slight abuse of notation, we’ll place functions like the policy π in this conditioning expres- sion, i.e., Eτ[f(τ) | π] = dτ pπ(τ)f(τ), where pπ(τ) is the probability distribution of trajectories obtained by executing policy π. 2.3 partially observed problems In the partially-observed setting, the agent only has access to an observation at each timestep, which may give noisy and incomplete information about the state. The agentPDF Image | OPTIMIZING EXPECTATIONS: FROM DEEP REINFORCEMENT LEARNING TO STOCHASTIC COMPUTATION GRAPHS

PDF Search Title:

OPTIMIZING EXPECTATIONS: FROM DEEP REINFORCEMENT LEARNING TO STOCHASTIC COMPUTATION GRAPHSOriginal File Name Searched:

thesis-optimizing-deep-learning.pdfDIY PDF Search: Google It | Yahoo | Bing

Cruise Ship Reviews | Luxury Resort | Jet | Yacht | and Travel Tech More Info

Cruising Review Topics and Articles More Info

Software based on Filemaker for the travel industry More Info

The Burgenstock Resort: Reviews on CruisingReview website... More Info

Resort Reviews: World Class resorts... More Info

The Riffelalp Resort: Reviews on CruisingReview website... More Info

| CONTACT TEL: 608-238-6001 Email: greg@cruisingreview.com | RSS | AMP |