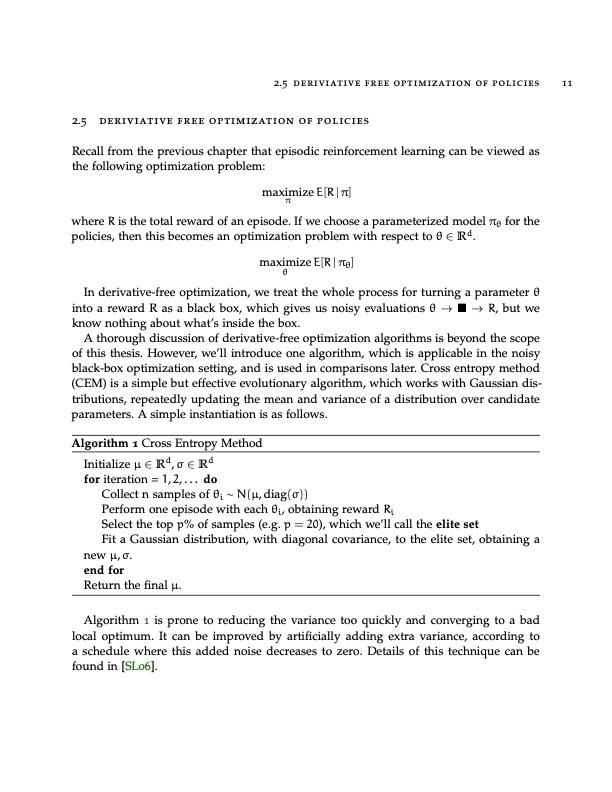

2.5 Derivative-Free Optimization of Policies

Recall from the previous chapter that episodic reinforcement learning can be viewed as the following optimization problem:

$$\underset{\pi}{\text{maximize}} \; \mathbb{E}[R \mid \pi]$$

where $R$ is the total reward of an episode. If we choose a parameterized model $\pi_\theta$ for the policies, then this becomes an optimization problem with respect to $\theta \in \mathbb{R}^d$:

$$\underset{\theta}{\text{maximize}} \; \mathbb{E}[R \mid \pi_\theta]$$

In derivative-free optimization, we treat the whole process for turning a parameter $\theta$ into a reward $R$ as a black box, which gives us noisy evaluations $\theta \to \blacksquare \to R$, but we know nothing about what's inside the box.

A thorough discussion of derivative-free optimization algorithms is beyond the scope of this thesis. However, we'll introduce one algorithm, which is applicable in the noisy black-box optimization setting, and is used in comparisons later. The cross entropy method (CEM) is a simple but effective evolutionary algorithm, which works with Gaussian distributions, repeatedly updating the mean and variance of a distribution over candidate parameters. A simple instantiation is as follows.

Algorithm 1: Cross Entropy Method

- Initialize $\mu \in \mathbb{R}^d$, $\sigma \in \mathbb{R}^d$
- for iteration = 1, 2, ... do
  - Collect $n$ samples of $\theta_i \sim N(\mu, \operatorname{diag}(\sigma))$
  - Perform one episode with each $\theta_i$, obtaining reward $R_i$
  - Select the top $p\%$ of samples (e.g. $p = 20$), which we'll call the elite set
  - Fit a Gaussian distribution, with diagonal covariance, to the elite set, obtaining a new $\mu, \sigma$
- end for
- Return the final $\mu$.

Algorithm 1 is prone to reducing the variance too quickly and converging to a bad local optimum. It can be improved by artificially adding extra variance, according to a schedule where this added noise decreases to zero. Details of this technique can be found in [SL06].
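As a rough illustration, the cross entropy method of Algorithm 1 might be sketched in plain Python as below. Several things here are assumptions rather than part of the text: the function name `cross_entropy_method`, its parameters, the choice to treat σ as a per-dimension standard deviation, and the toy black box `noisy_quadratic`. The optional `extra_std` term adds variance that decays linearly to zero; this linear schedule is only a placeholder for the idea of decreasing added noise and is not the specific schedule of [SL06].

```python
import random
import statistics


def cross_entropy_method(episode_reward, mu, sigma, n_samples=50,
                         elite_frac=0.2, n_iters=30, extra_std=0.0, rng=None):
    """Sketch of CEM with a diagonal Gaussian over parameters theta.

    episode_reward: callable theta -> noisy scalar reward R (the black box).
    mu, sigma: per-dimension mean and standard deviation (assumed convention).
    extra_std: hypothetical extra noise level, decayed linearly to zero.
    """
    rng = rng or random.Random()
    mu, sigma = list(mu), list(sigma)
    d = len(mu)
    n_elite = max(2, int(round(elite_frac * n_samples)))
    for it in range(n_iters):
        # Collect n samples theta_i from the current diagonal Gaussian.
        thetas = [[rng.gauss(mu[j], sigma[j]) for j in range(d)]
                  for _ in range(n_samples)]
        # Perform one episode per sample, obtaining a noisy reward R_i.
        rewards = [episode_reward(theta) for theta in thetas]
        # Elite set: the top fraction of samples ranked by reward.
        order = sorted(range(n_samples), key=lambda i: rewards[i], reverse=True)
        elite = [thetas[i] for i in order[:n_elite]]
        # Extra standard deviation for this iteration; reaches 0 at the end.
        decay = extra_std * max(0.0, 1.0 - (it + 1) / n_iters)
        # Refit the diagonal Gaussian to the elite set, dimension by dimension.
        for j in range(d):
            column = [theta[j] for theta in elite]
            mu[j] = statistics.fmean(column)
            sigma[j] = (statistics.pvariance(column) + decay ** 2) ** 0.5
    return mu


# Hypothetical black box: a noisy reward that peaks at theta = (1, -2).
_noise = random.Random(0)


def noisy_quadratic(theta, target=(1.0, -2.0), noise_std=0.01):
    clean = -sum((t - g) ** 2 for t, g in zip(theta, target))
    return clean + _noise.gauss(0.0, noise_std)


estimate = cross_entropy_method(noisy_quadratic, mu=[0.0, 0.0],
                                sigma=[3.0, 3.0], extra_std=0.5,
                                rng=random.Random(1))
```

In a reinforcement learning setting, `episode_reward` would run one episode with the policy parameterized by `theta` and return its total reward; the quadratic above only stands in for that process so the sketch can run on its own.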

Source: OPTIMIZING EXPECTATIONS: FROM DEEP REINFORCEMENT LEARNING TO STOCHASTIC COMPUTATION GRAPHS (original file name: thesis-optimizing-deep-learning.pdf)
