
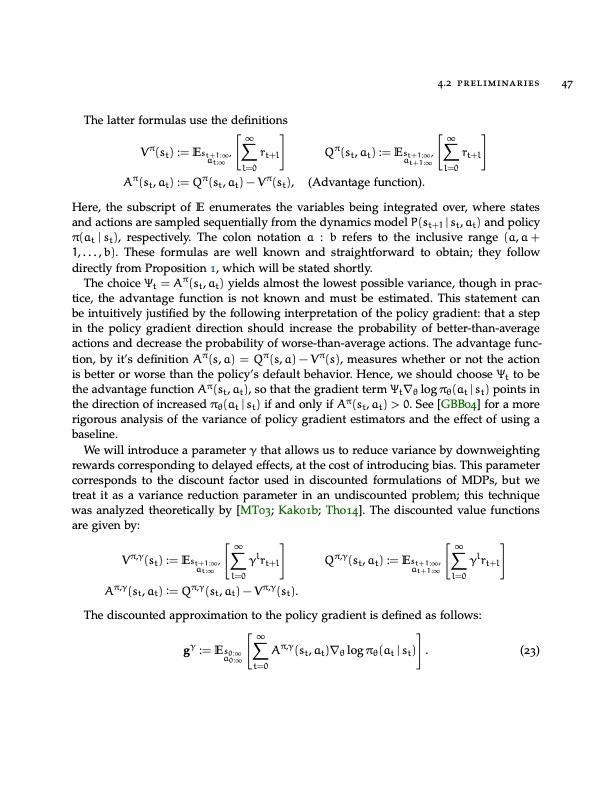

The latter formulas use the definitions

$$
\begin{aligned}
V^{\pi}(s_t) &:= \mathbb{E}_{s_{t+1:\infty},\, a_{t:\infty}}\left[\sum_{l=0}^{\infty} r_{t+l}\right], \\
Q^{\pi}(s_t, a_t) &:= \mathbb{E}_{s_{t+1:\infty},\, a_{t+1:\infty}}\left[\sum_{l=0}^{\infty} r_{t+l}\right], \\
A^{\pi}(s_t, a_t) &:= Q^{\pi}(s_t, a_t) - V^{\pi}(s_t) \qquad \text{(Advantage function)}.
\end{aligned}
$$

Here, the subscript of $\mathbb{E}$ enumerates the variables being integrated over, where states and actions are sampled sequentially from the dynamics model $P(s_{t+1} \mid s_t, a_t)$ and the policy $\pi(a_t \mid s_t)$, respectively. The colon notation $a:b$ refers to the inclusive range $(a, a+1, \dots, b)$. These formulas are well known and straightforward to obtain; they follow directly from Proposition 1, which will be stated shortly.

The choice $\Psi_t = A^{\pi}(s_t, a_t)$ yields almost the lowest possible variance, though in practice the advantage function is not known and must be estimated. This statement can be intuitively justified by the following interpretation of the policy gradient: a step in the policy gradient direction should increase the probability of better-than-average actions and decrease the probability of worse-than-average actions. The advantage function, by its definition $A^{\pi}(s, a) = Q^{\pi}(s, a) - V^{\pi}(s)$, measures whether the action is better or worse than the policy's default behavior. Hence, we should choose $\Psi_t$ to be the advantage function $A^{\pi}(s_t, a_t)$, so that the gradient term $\Psi_t \nabla_\theta \log \pi_\theta(a_t \mid s_t)$ points in the direction of increased $\pi_\theta(a_t \mid s_t)$ if and only if $A^{\pi}(s_t, a_t) > 0$. See [GBB04] for a more rigorous analysis of the variance of policy gradient estimators and the effect of using a baseline.

We will introduce a parameter $\gamma$ that allows us to reduce variance by downweighting rewards corresponding to delayed effects, at the cost of introducing bias. This parameter corresponds to the discount factor used in discounted formulations of MDPs, but we treat it as a variance reduction parameter in an undiscounted problem; this technique was analyzed theoretically by [MT03; Kak01b; Tho14].
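The interpretation of the policy gradient given earlier, that a step along $\Psi_t \nabla_\theta \log \pi_\theta(a_t \mid s_t)$ with $\Psi_t = A^{\pi}(s_t, a_t)$ raises $\pi_\theta(a_t \mid s_t)$ exactly when the advantage is positive, can be checked numerically. The following is a minimal sketch, assuming a single state, a softmax policy over three discrete actions, and fixed numbers standing in for $A^{\pi}(s_t, a_t)$; the logits, learning rate, and helper names (`softmax`, `step_on_action`) are hypothetical choices for illustration, not taken from the text.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    z = sum(exps)
    return [e / z for e in exps]


def step_on_action(logits, action, advantage, lr=0.1):
    """One gradient-ascent step on advantage * log pi(action) for a softmax policy.

    For softmax logits, d log pi(a) / d logit_b = 1[b == a] - pi(b).
    `advantage` is a hypothetical stand-in for A^pi(s_t, a_t).
    """
    probs = softmax(logits)
    grad_log_pi = [(1.0 if b == action else 0.0) - p for b, p in enumerate(probs)]
    return [x + lr * advantage * g for x, g in zip(logits, grad_log_pi)]


# Hypothetical single-state example with three actions; we update action 0.
logits = [0.2, -0.1, 0.5]
before = softmax(logits)[0]
after_pos = softmax(step_on_action(logits, 0, advantage=+1.0))[0]  # better than average
after_neg = softmax(step_on_action(logits, 0, advantage=-1.0))[0]  # worse than average
print(before, after_pos, after_neg)  # after_pos > before > after_neg
```

The sign behavior is not specific to softmax policies: since $\nabla_\theta \pi_\theta(a \mid s) = \pi_\theta(a \mid s)\, \nabla_\theta \log \pi_\theta(a \mid s)$, moving along $A\, \nabla_\theta \log \pi_\theta(a \mid s)$ changes $\pi_\theta(a \mid s)$ at rate $A\, \pi_\theta(a \mid s)\, \|\nabla_\theta \log \pi_\theta(a \mid s)\|^2$, which has the sign of $A$ whenever the gradient is nonzero. A finite step such as `lr=0.1` only approximates this first-order behavior.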
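The discounted value functions are given by:

$$
V^{\pi,\gamma}(s_t) := \mathbb{E}_{s_{t+1:\infty},\, a_{t:\infty}}\left[\sum_{l=0}^{\infty} \gamma^{l} r_{t+l}\right], \qquad
Q^{\pi,\gamma}(s_t, a_t) := \mathbb{E}_{s_{t+1:\infty},\, a_{t+1:\infty}}\left[\sum_{l=0}^{\infty} \gamma^{l} r_{t+l}\right], \tag{23}
$$

$$
A^{\pi,\gamma}(s_t, a_t) := Q^{\pi,\gamma}(s_t, a_t) - V^{\pi,\gamma}(s_t).
$$

The discounted approximation to the policy gradient is defined as follows:

$$
g^{\gamma} := \mathbb{E}_{s_{0:\infty},\, a_{0:\infty}}\left[\sum_{t=0}^{\infty} A^{\pi,\gamma}(s_t, a_t)\, \nabla_\theta \log \pi_\theta(a_t \mid s_t)\right].
$$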
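A Monte Carlo estimate of $g^{\gamma}$ can be sketched in a few lines. The sketch below assumes a tabular softmax policy (logits `theta[s][a]`), a single sampled episode that terminates after its last recorded reward, and a hypothetical per-state baseline `baseline[s]`; it replaces $A^{\pi,\gamma}(s_t, a_t)$ with the discounted reward-to-go $\sum_{l \ge 0} \gamma^{l} r_{t+l}$ minus the baseline. This is a simple illustrative estimator, not necessarily the one the text goes on to develop. Because the reward-to-go has conditional expectation $Q^{\pi,\gamma}(s_t, a_t)$ and a baseline depending only on $s_t$ contributes zero in expectation, the estimate is unbiased for $g^{\gamma}$ (though still biased with respect to the undiscounted gradient when $\gamma < 1$).

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    z = sum(exps)
    return [e / z for e in exps]


def discounted_rewards_to_go(rewards, gamma):
    """Return R_t = sum_{l>=0} gamma**l * r_{t+l} for every t.

    Assumes the episode ends after the last reward, so later rewards are zero.
    Computed backwards in one pass via R_t = r_t + gamma * R_{t+1}.
    """
    out = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        out[t] = running
    return out


def grad_log_pi(theta, s, a):
    """Gradient of log pi(a | s) w.r.t. the logits theta[s] (tabular softmax).

    d log pi(a|s) / d theta[s][b] = 1[b == a] - pi(b|s); the gradient
    w.r.t. the logits of any other state is zero.
    """
    probs = softmax(theta[s])
    return [(1.0 if b == a else 0.0) - p for b, p in enumerate(probs)]


def policy_gradient_estimate(theta, states, actions, rewards, gamma, baseline):
    """Single-episode estimate of g^gamma.

    Uses (R_t - baseline[s_t]) as the advantage estimate; `baseline` is a
    hypothetical per-state value estimate supplied by the caller.
    """
    returns = discounted_rewards_to_go(rewards, gamma)
    grad = [[0.0] * len(row) for row in theta]
    for s, a, ret in zip(states, actions, returns):
        adv = ret - baseline[s]
        for b, g in enumerate(grad_log_pi(theta, s, a)):
            grad[s][b] += adv * g
    return grad


# Hypothetical example: 2 states x 2 actions, uniform initial policy.
theta = [[0.0, 0.0], [0.0, 0.0]]
g = policy_gradient_estimate(
    theta,
    states=[0, 1, 0],
    actions=[1, 0, 1],
    rewards=[0.0, 0.0, 1.0],
    gamma=0.9,
    baseline=[0.5, 0.5],
)
print(g)  # approximately [[-0.405, 0.405], [0.2, -0.2]]
```

Setting `gamma=1.0` recovers undiscounted rewards-to-go; lowering `gamma` shrinks the weight on rewards far in the future, which is the variance-for-bias trade the text describes.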

OPTIMIZING EXPECTATIONS: FROM DEEP REINFORCEMENT LEARNING TO STOCHASTIC COMPUTATION GRAPHS