Text from PDF Page: 080

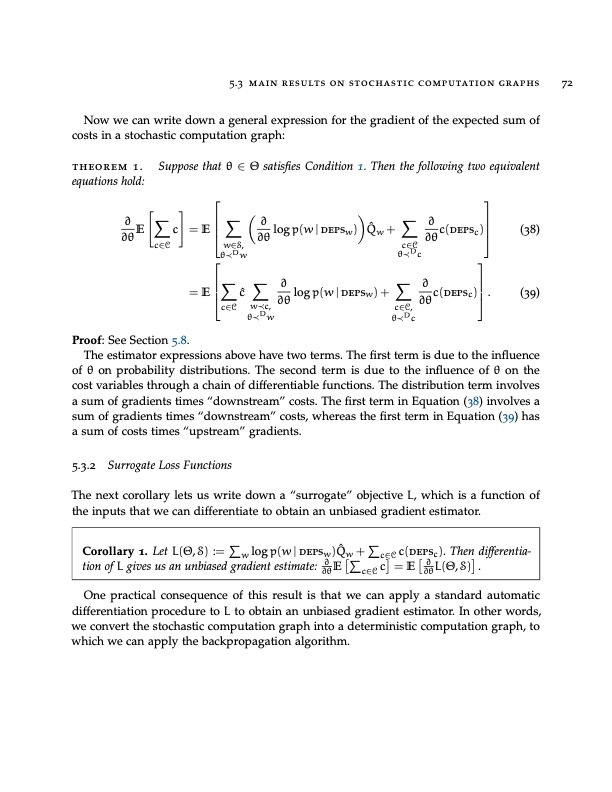

5.3 Main Results on Stochastic Computation Graphs

Now we can write down a general expression for the gradient of the expected sum of costs in a stochastic computation graph:

Theorem 1. Suppose that $\theta \in \Theta$ satisfies Condition 1. Then the following two equivalent equations hold:

$$
\frac{\partial}{\partial\theta}\,\mathbb{E}\left[\sum_{c \in C} c\right]
= \mathbb{E}\left[\sum_{\substack{w \in S,\\ \theta \prec^{D} w}} \left(\frac{\partial}{\partial\theta} \log p(w \mid \mathrm{deps}_w)\right) \hat{Q}_w
+ \sum_{\substack{c \in C,\\ \theta \prec^{D} c}} \frac{\partial}{\partial\theta}\, c(\mathrm{deps}_c)\right] \tag{38}
$$

$$
= \mathbb{E}\left[\sum_{c \in C} \hat{c} \sum_{\substack{w \prec c,\\ \theta \prec^{D} w}} \frac{\partial}{\partial\theta} \log p(w \mid \mathrm{deps}_w)
+ \sum_{\substack{c \in C,\\ \theta \prec^{D} c}} \frac{\partial}{\partial\theta}\, c(\mathrm{deps}_c)\right] \tag{39}
$$

Proof: See Section 5.8.

The estimator expressions above have two terms. The first term is due to the influence of $\theta$ on probability distributions. The second term is due to the influence of $\theta$ on the cost variables through a chain of differentiable functions. The first (distribution) term in Equation (38) involves a sum of gradients times "downstream" costs, whereas the first term in Equation (39) has a sum of costs times "upstream" gradients.

5.3.2 Surrogate Loss Functions

The next corollary lets us write down a "surrogate" objective $L$, which is a function of the inputs that we can differentiate to obtain an unbiased gradient estimator. One practical consequence of this result is that we can apply a standard automatic differentiation procedure to $L$. In other words, we convert the stochastic computation graph into a deterministic computation graph, to which we can apply the backpropagation algorithm.

Corollary 1. Let

$$
L(\Theta, S) := \sum_{w} \log p(w \mid \mathrm{deps}_w)\, \hat{Q}_w + \sum_{c \in C} c(\mathrm{deps}_c).
$$

Then differentiation of $L$ gives us an unbiased gradient estimate:

$$
\frac{\partial}{\partial\theta}\,\mathbb{E}\left[\sum_{c \in C} c\right] = \mathbb{E}\left[\frac{\partial}{\partial\theta} L(\Theta, S)\right].
$$
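As a rough illustration of the surrogate-loss idea, the sketch below checks the identity numerically on a toy graph that is not from the text: a single stochastic node w ~ Bernoulli(sigmoid(θ)) and a single cost c(w, θ) = (w − 0.3)² + 0.1 θ². The graph, the cost, and all function names are hypothetical choices made for this example. The sketch assumes that Q̂_w is held fixed when the surrogate is differentiated, matching its role as a plain multiplier in Equation (38), and it uses a central finite difference as a stand-in for automatic differentiation so that only the standard library is needed.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def cost(w, theta):
    # Hypothetical cost node: depends on the sample w and, deterministically, on theta.
    return (w - 0.3) ** 2 + 0.1 * theta ** 2

def log_prob(w, theta):
    # log p(w | theta) for w ~ Bernoulli(sigmoid(theta)).
    p = sigmoid(theta)
    return math.log(p) if w == 1 else math.log(1.0 - p)

def surrogate(theta, w, q_hat):
    # L = log p(w | deps_w) * Q_hat_w + c(deps_c).
    # q_hat is passed in as a number, so it is constant with respect to theta.
    return log_prob(w, theta) * q_hat + cost(w, theta)

def ddtheta(f, theta, eps=1e-5):
    # Central finite difference, standing in for automatic differentiation.
    return (f(theta + eps) - f(theta - eps)) / (2.0 * eps)

def expected_cost(theta):
    # Exact E[c]: w takes only the values 0 and 1, so we can enumerate.
    return sum(math.exp(log_prob(w, theta)) * cost(w, theta) for w in (0, 1))

def expected_surrogate_grad(theta):
    # Exact E[dL/dtheta], again by enumerating w.
    total = 0.0
    for w in (0, 1):
        p_w = math.exp(log_prob(w, theta))
        q_hat = cost(w, theta)  # downstream cost of w, frozen at the current theta
        total += p_w * ddtheta(lambda t: surrogate(t, w, q_hat), theta)
    return total

theta0 = 0.7
lhs = ddtheta(expected_cost, theta0)    # gradient of the expected cost
rhs = expected_surrogate_grad(theta0)   # expected gradient of the surrogate
print(lhs, rhs)
```

The two printed values should agree up to finite-difference error. In practice the expectation would be estimated from samples of w rather than enumerated, and an automatic differentiation library would replace `ddtheta`; enumeration is used here only to make the check deterministic.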

PDF Search Title: OPTIMIZING EXPECTATIONS: FROM DEEP REINFORCEMENT LEARNING TO STOCHASTIC COMPUTATION GRAPHS

Original File Name Searched: thesis-optimizing-deep-learning.pdf
