OPTIMIZING EXPECTATIONS: FROM DEEP REINFORCEMENT LEARNING TO STOCHASTIC COMPUTATION GRAPHS

Text from PDF Page: 097

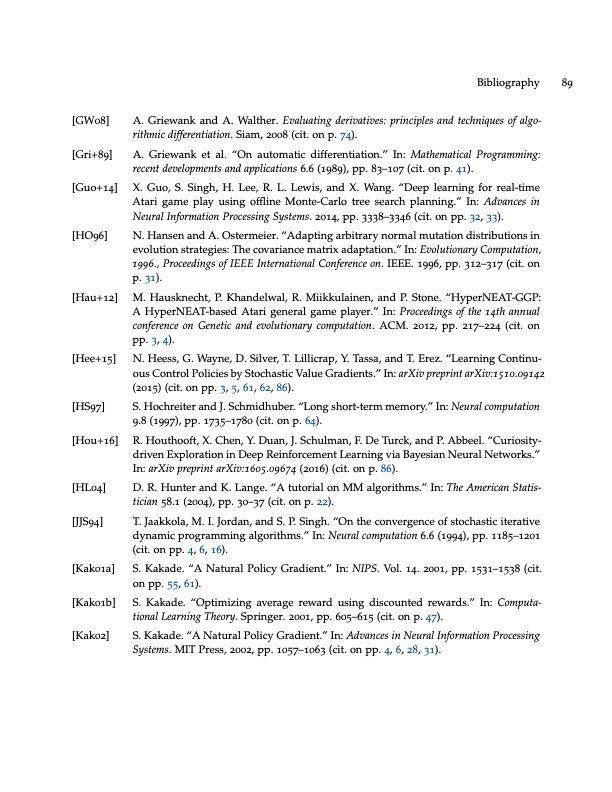

Bibliography

[GW08] A. Griewank and A. Walther. Evaluating derivatives: principles and techniques of algorithmic differentiation. SIAM, 2008 (cit. on p. 74).

[Gri+89] A. Griewank et al. “On automatic differentiation.” In: Mathematical Programming: recent developments and applications 6.6 (1989), pp. 83–107 (cit. on p. 41).

[Guo+14] X. Guo, S. Singh, H. Lee, R. L. Lewis, and X. Wang. “Deep learning for real-time Atari game play using offline Monte-Carlo tree search planning.” In: Advances in Neural Information Processing Systems. 2014, pp. 3338–3346 (cit. on pp. 32, 33).

[HO96] N. Hansen and A. Ostermeier. “Adapting arbitrary normal mutation distributions in evolution strategies: The covariance matrix adaptation.” In: Proceedings of IEEE International Conference on Evolutionary Computation, 1996. IEEE. 1996, pp. 312–317 (cit. on p. 31).

[Hau+12] M. Hausknecht, P. Khandelwal, R. Miikkulainen, and P. Stone. “HyperNEAT-GGP: A HyperNEAT-based Atari general game player.” In: Proceedings of the 14th annual conference on Genetic and evolutionary computation. ACM. 2012, pp. 217–224 (cit. on pp. 3, 4).

[Hee+15] N. Heess, G. Wayne, D. Silver, T. Lillicrap, Y. Tassa, and T. Erez. “Learning Continuous Control Policies by Stochastic Value Gradients.” In: arXiv preprint arXiv:1510.09142 (2015) (cit. on pp. 3, 5, 61, 62, 86).

[HS97] S. Hochreiter and J. Schmidhuber. “Long short-term memory.” In: Neural computation 9.8 (1997), pp. 1735–1780 (cit. on p. 64).

[Hou+16] R. Houthooft, X. Chen, Y. Duan, J. Schulman, F. De Turck, and P. Abbeel. “Curiosity-driven Exploration in Deep Reinforcement Learning via Bayesian Neural Networks.” In: arXiv preprint arXiv:1605.09674 (2016) (cit. on p. 86).

[HL04] D. R. Hunter and K. Lange. “A tutorial on MM algorithms.” In: The American Statistician 58.1 (2004), pp. 30–37 (cit. on p. 22).

[JJS94] T. Jaakkola, M. I. Jordan, and S. P. Singh. “On the convergence of stochastic iterative dynamic programming algorithms.” In: Neural computation 6.6 (1994), pp. 1185–1201 (cit. on pp. 4, 6, 16).

[Kak01a] S. Kakade. “A Natural Policy Gradient.” In: NIPS. Vol. 14. 2001, pp. 1531–1538 (cit. on pp. 55, 61).

[Kak01b] S. Kakade. “Optimizing average reward using discounted rewards.” In: Computational Learning Theory. Springer. 2001, pp. 605–615 (cit. on p. 47).

[Kak02] S. Kakade. “A Natural Policy Gradient.” In: Advances in Neural Information Processing Systems. MIT Press, 2002, pp. 1057–1063 (cit. on pp. 4, 6, 28, 31).

