PDF Publication Title:

Text from PDF Page: 010

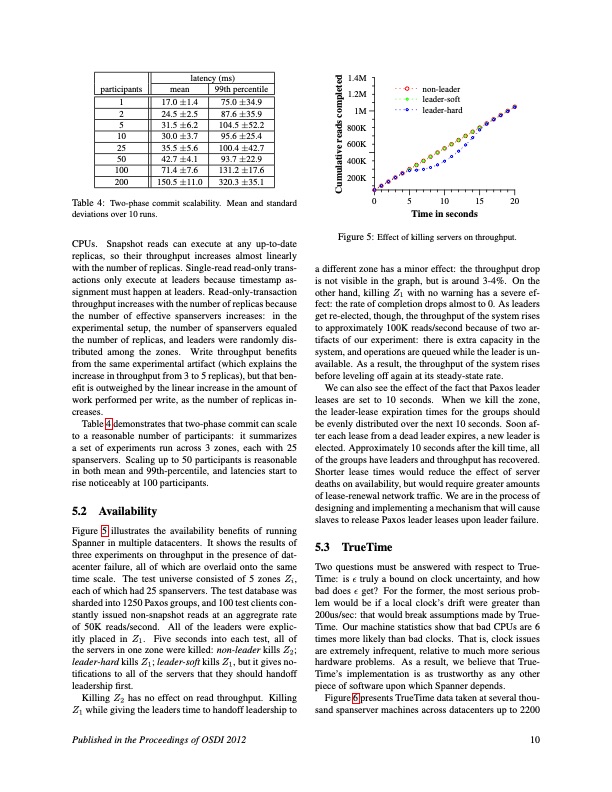

mean non-leader leader-soft leader-hard 0 5 10 15 20 Time in seconds latency (ms) 99th percentile 1.4M 1.2M 1M 800K 600K 400K 200K participants 1 17.0 ±1.4 2 24.5 ±2.5 5 31.5 ±6.2 10 30.0 ±3.7 25 35.5 ±5.6 50 42.7 ±4.1 100 71.4 ±7.6 200 150.5 ±11.0 75.0 ±34.9 87.6 ±35.9 104.5 ±52.2 95.6 ±25.4 100.4 ±42.7 93.7 ±22.9 131.2 ±17.6 320.3 ±35.1 Table 4: Two-phase commit scalability. Mean and standard deviations over 10 runs. CPUs. Snapshot reads can execute at any up-to-date replicas, so their throughput increases almost linearly with the number of replicas. Single-read read-only trans- actions only execute at leaders because timestamp as- signment must happen at leaders. Read-only-transaction throughput increases with the number of replicas because the number of effective spanservers increases: in the experimental setup, the number of spanservers equaled the number of replicas, and leaders were randomly dis- tributed among the zones. Write throughput benefits from the same experimental artifact (which explains the increase in throughput from 3 to 5 replicas), but that ben- efit is outweighed by the linear increase in the amount of work performed per write, as the number of replicas in- creases. Table 4 demonstrates that two-phase commit can scale to a reasonable number of participants: it summarizes a set of experiments run across 3 zones, each with 25 spanservers. Scaling up to 50 participants is reasonable in both mean and 99th-percentile, and latencies start to rise noticeably at 100 participants. 5.2 Availability Figure 5 illustrates the availability benefits of running Spanner in multiple datacenters. It shows the results of three experiments on throughput in the presence of dat- acenter failure, all of which are overlaid onto the same time scale. The test universe consisted of 5 zones Zi, each of which had 25 spanservers. The test database was sharded into 1250 Paxos groups, and 100 test clients con- stantly issued non-snapshot reads at an aggregrate rate of 50K reads/second. All of the leaders were explic- itly placed in Z1. Five seconds into each test, all of the servers in one zone were killed: non-leader kills Z2; leader-hard kills Z1 ; leader-soft kills Z1 , but it gives no- tifications to all of the servers that they should handoff leadership first. Killing Z2 has no effect on read throughput. Killing Z1 while giving the leaders time to handoff leadership to Figure 5: Effect of killing servers on throughput. a different zone has a minor effect: the throughput drop is not visible in the graph, but is around 3-4%. On the other hand, killing Z1 with no warning has a severe ef- fect: the rate of completion drops almost to 0. As leaders get re-elected, though, the throughput of the system rises to approximately 100K reads/second because of two ar- tifacts of our experiment: there is extra capacity in the system, and operations are queued while the leader is un- available. As a result, the throughput of the system rises before leveling off again at its steady-state rate. We can also see the effect of the fact that Paxos leader leases are set to 10 seconds. When we kill the zone, the leader-lease expiration times for the groups should be evenly distributed over the next 10 seconds. Soon af- ter each lease from a dead leader expires, a new leader is elected. Approximately 10 seconds after the kill time, all of the groups have leaders and throughput has recovered. Shorter lease times would reduce the effect of server deaths on availability, but would require greater amounts of lease-renewal network traffic. We are in the process of designing and implementing a mechanism that will cause slaves to release Paxos leader leases upon leader failure. 5.3 TrueTime Two questions must be answered with respect to True- Time: is ε truly a bound on clock uncertainty, and how bad does ε get? For the former, the most serious prob- lem would be if a local clock’s drift were greater than 200us/sec: that would break assumptions made by True- Time. Our machine statistics show that bad CPUs are 6 times more likely than bad clocks. That is, clock issues are extremely infrequent, relative to much more serious hardware problems. As a result, we believe that True- Time’s implementation is as trustworthy as any other piece of software upon which Spanner depends. Figure 6 presents TrueTime data taken at several thou- sand spanserver machines across datacenters up to 2200 Published in the Proceedings of OSDI 2012 10 Cumulative reads completedPDF Image | Google Globally-Distributed Database

PDF Search Title:

Google Globally-Distributed DatabaseOriginal File Name Searched:

spanner-osdi2012.pdfDIY PDF Search: Google It | Yahoo | Bing

Cruise Ship Reviews | Luxury Resort | Jet | Yacht | and Travel Tech More Info

Cruising Review Topics and Articles More Info

Software based on Filemaker for the travel industry More Info

The Burgenstock Resort: Reviews on CruisingReview website... More Info

Resort Reviews: World Class resorts... More Info

The Riffelalp Resort: Reviews on CruisingReview website... More Info

| CONTACT TEL: 608-238-6001 Email: greg@cruisingreview.com | RSS | AMP |