PDF Publication Title:

Text from PDF Page: 012

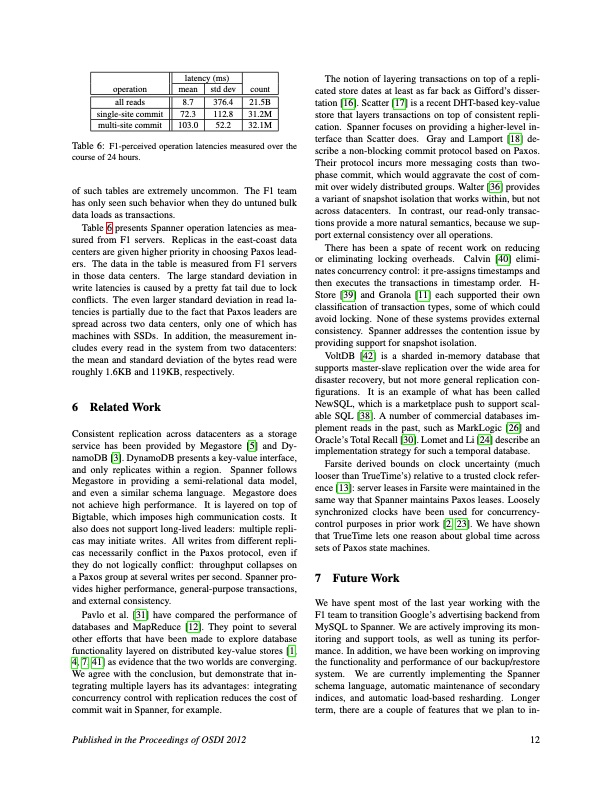

operation all reads single-site commit multi-site commit latency (ms) mean std dev 8.7 376.4 72.3 112.8 103.0 52.2 count 21.5B 31.2M 32.1M The notion of layering transactions on top of a repli- cated store dates at least as far back as Gifford’s disser- tation [16]. Scatter [17] is a recent DHT-based key-value store that layers transactions on top of consistent repli- cation. Spanner focuses on providing a higher-level in- terface than Scatter does. Gray and Lamport [18] de- scribe a non-blocking commit protocol based on Paxos. Their protocol incurs more messaging costs than two- phase commit, which would aggravate the cost of com- mit over widely distributed groups. Walter [36] provides a variant of snapshot isolation that works within, but not across datacenters. In contrast, our read-only transac- tions provide a more natural semantics, because we sup- port external consistency over all operations. There has been a spate of recent work on reducing or eliminating locking overheads. Calvin [40] elimi- nates concurrency control: it pre-assigns timestamps and then executes the transactions in timestamp order. H- Store [39] and Granola [11] each supported their own classification of transaction types, some of which could avoid locking. None of these systems provides external consistency. Spanner addresses the contention issue by providing support for snapshot isolation. VoltDB [42] is a sharded in-memory database that supports master-slave replication over the wide area for disaster recovery, but not more general replication con- figurations. It is an example of what has been called NewSQL, which is a marketplace push to support scal- able SQL [38]. A number of commercial databases im- plement reads in the past, such as MarkLogic [26] and Oracle’s Total Recall [30]. Lomet and Li [24] describe an implementation strategy for such a temporal database. Farsite derived bounds on clock uncertainty (much looser than TrueTime’s) relative to a trusted clock refer- ence [13]: server leases in Farsite were maintained in the same way that Spanner maintains Paxos leases. Loosely synchronized clocks have been used for concurrency- control purposes in prior work [2, 23]. We have shown that TrueTime lets one reason about global time across sets of Paxos state machines. 7 Future Work We have spent most of the last year working with the F1 team to transition Google’s advertising backend from MySQL to Spanner. We are actively improving its mon- itoring and support tools, as well as tuning its perfor- mance. In addition, we have been working on improving the functionality and performance of our backup/restore system. We are currently implementing the Spanner schema language, automatic maintenance of secondary indices, and automatic load-based resharding. Longer term, there are a couple of features that we plan to in- Table 6: F1-perceived operation latencies measured over the course of 24 hours. of such tables are extremely uncommon. The F1 team has only seen such behavior when they do untuned bulk data loads as transactions. Table 6 presents Spanner operation latencies as mea- sured from F1 servers. Replicas in the east-coast data centers are given higher priority in choosing Paxos lead- ers. The data in the table is measured from F1 servers in those data centers. The large standard deviation in write latencies is caused by a pretty fat tail due to lock conflicts. The even larger standard deviation in read la- tencies is partially due to the fact that Paxos leaders are spread across two data centers, only one of which has machines with SSDs. In addition, the measurement in- cludes every read in the system from two datacenters: the mean and standard deviation of the bytes read were roughly 1.6KB and 119KB, respectively. 6 Related Work Consistent replication across datacenters as a storage service has been provided by Megastore [5] and Dy- namoDB [3]. DynamoDB presents a key-value interface, and only replicates within a region. Spanner follows Megastore in providing a semi-relational data model, and even a similar schema language. Megastore does not achieve high performance. It is layered on top of Bigtable, which imposes high communication costs. It also does not support long-lived leaders: multiple repli- cas may initiate writes. All writes from different repli- cas necessarily conflict in the Paxos protocol, even if they do not logically conflict: throughput collapses on a Paxos group at several writes per second. Spanner pro- vides higher performance, general-purpose transactions, and external consistency. Pavlo et al. [31] have compared the performance of databases and MapReduce [12]. They point to several other efforts that have been made to explore database functionality layered on distributed key-value stores [1, 4, 7, 41] as evidence that the two worlds are converging. We agree with the conclusion, but demonstrate that in- tegrating multiple layers has its advantages: integrating concurrency control with replication reduces the cost of commit wait in Spanner, for example. Published in the Proceedings of OSDI 2012 12PDF Image | Google Globally-Distributed Database

PDF Search Title:

Google Globally-Distributed DatabaseOriginal File Name Searched:

spanner-osdi2012.pdfDIY PDF Search: Google It | Yahoo | Bing

Cruise Ship Reviews | Luxury Resort | Jet | Yacht | and Travel Tech More Info

Cruising Review Topics and Articles More Info

Software based on Filemaker for the travel industry More Info

The Burgenstock Resort: Reviews on CruisingReview website... More Info

Resort Reviews: World Class resorts... More Info

The Riffelalp Resort: Reviews on CruisingReview website... More Info

| CONTACT TEL: 608-238-6001 Email: greg@cruisingreview.com | RSS | AMP |