PDF Publication Title:

Text from PDF Page: 156

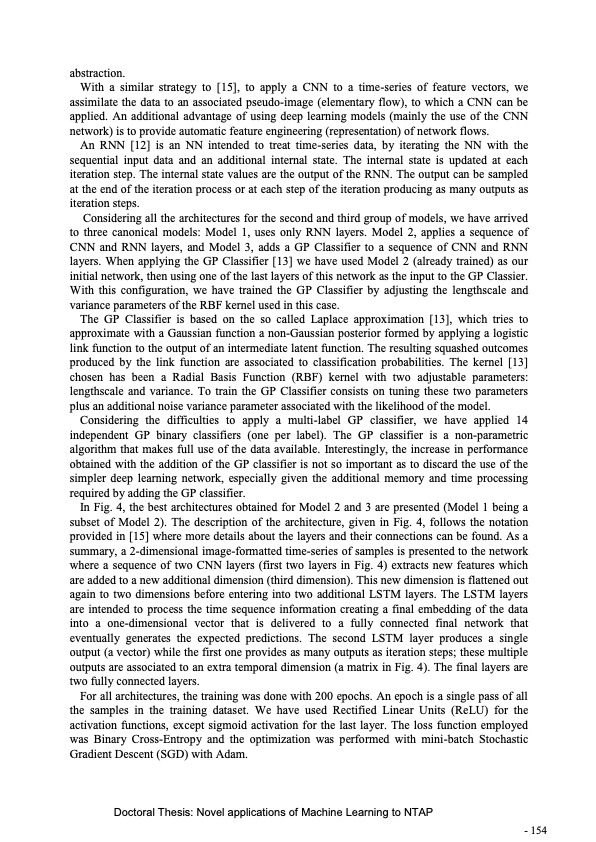

abstraction. Following a strategy similar to that of [15], to apply a CNN to a time-series of feature vectors we map the data to an associated pseudo-image (an elementary flow) to which a CNN can be applied. An additional advantage of deep learning models (mainly of the CNN) is that they perform automatic feature engineering (representation learning) on network flows.

An RNN [12] is an NN designed to process time-series data: the NN is applied iteratively to the sequential input data together with an additional internal state, which is updated at each iteration step. The internal state values are the output of the RNN. This output can be sampled either at the end of the iteration process or at every step, in which case the RNN produces as many outputs as there are iteration steps.

Considering all the architectures for the second and third groups of models, we arrived at three canonical models: Model 1 uses only RNN layers; Model 2 applies a sequence of CNN and RNN layers; and Model 3 adds a GP Classifier to a sequence of CNN and RNN layers. When applying the GP Classifier [13], we used the already trained Model 2 as our initial network and fed the output of one of its last layers as the input to the GP Classifier. With this configuration, we trained the GP Classifier by adjusting the lengthscale and variance parameters of its RBF kernel. The GP Classifier is based on the so-called Laplace approximation [13], which approximates with a Gaussian the non-Gaussian posterior that results from applying a logistic link function to the output of an intermediate latent function. The link function squashes the latent outputs into values that are interpreted as classification probabilities. The chosen kernel [13] is a Radial Basis Function (RBF) kernel with two adjustable parameters: lengthscale and variance. Training the GP Classifier consists of tuning these two parameters plus an additional noise variance parameter associated with the likelihood of the model.
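As an illustration of the GP Classifier described above, the following is a minimal NumPy sketch of a binary GP classifier with an RBF kernel, a logistic link, and the Laplace approximation, following the textbook formulation in Rasmussen and Williams, *Gaussian Processes for Machine Learning* (Algorithms 3.1 and 3.2). It is not the implementation used in this work. Assumptions: the names `rbf_kernel` and `LaplaceGPClassifier` are hypothetical; lengthscale and variance are fixed by hand rather than tuned; the likelihood noise variance mentioned in the text is not modeled; the predictive probability uses the probit-style approximation to the Gaussian-averaged sigmoid; and toy 1-D inputs stand in for the embedding taken from one of the last layers of Model 2.

```python
import numpy as np


def rbf_kernel(A, B, lengthscale=1.0, variance=1.0):
    """RBF kernel: variance * exp(-||a - b||^2 / (2 * lengthscale^2))."""
    sq_dist = (
        np.sum(A**2, axis=1)[:, None]
        + np.sum(B**2, axis=1)[None, :]
        - 2.0 * A @ B.T
    )
    sq_dist = np.maximum(sq_dist, 0.0)  # clip tiny negative round-off
    return variance * np.exp(-0.5 * sq_dist / lengthscale**2)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class LaplaceGPClassifier:
    """Hypothetical binary GP classifier: logistic link + Laplace approximation.

    Kernel hyperparameters are fixed here rather than tuned.
    """

    def __init__(self, lengthscale=1.0, variance=1.0, max_iter=100, tol=1e-8):
        self.lengthscale = lengthscale
        self.variance = variance
        self.max_iter = max_iter
        self.tol = tol

    def fit(self, X, t):
        """X: (n, d) feature vectors; t: (n,) binary labels in {0, 1}."""
        self.X = np.asarray(X, dtype=float)
        t = np.asarray(t, dtype=float)
        n = len(t)
        K = rbf_kernel(self.X, self.X, self.lengthscale, self.variance)
        f = np.zeros(n)  # latent function values at the training points
        # Newton iterations for the mode of the non-Gaussian posterior p(f | X, t)
        for _ in range(self.max_iter):
            p = sigmoid(f)
            W = p * (1.0 - p)  # negative Hessian of log p(t | f), diagonal
            sW = np.sqrt(W)
            L = np.linalg.cholesky(np.eye(n) + sW[:, None] * K * sW[None, :])
            b = W * f + (t - p)  # (t - p) is the gradient of log p(t | f)
            c = np.linalg.solve(L, sW * (K @ b))
            a = b - sW * np.linalg.solve(L.T, c)
            f_new = K @ a
            converged = np.max(np.abs(f_new - f)) < self.tol
            f = f_new
            if converged:
                break
        # Quantities at the mode, reused for prediction
        p = sigmoid(f)
        self.grad = t - p
        self.sW = np.sqrt(p * (1.0 - p))
        self.L = np.linalg.cholesky(
            np.eye(n) + self.sW[:, None] * K * self.sW[None, :]
        )
        return self

    def predict_proba(self, X_star):
        """Approximate P(t = 1 | x*) for each row of X_star."""
        X_star = np.asarray(X_star, dtype=float)
        K_s = rbf_kernel(self.X, X_star, self.lengthscale, self.variance)  # (n, m)
        mean = K_s.T @ self.grad  # latent predictive mean
        v = np.linalg.solve(self.L, self.sW[:, None] * K_s)
        # Latent predictive variance; k(x*, x*) equals `variance` for the RBF kernel
        var = np.maximum(self.variance - np.sum(v**2, axis=0), 0.0)
        # Probit-style approximation to the Gaussian-averaged sigmoid
        kappa = 1.0 / np.sqrt(1.0 + np.pi * var / 8.0)
        return sigmoid(kappa * mean)


# Toy usage: 1-D features standing in for the network embedding
X = np.array([[-3.0], [-2.0], [-1.0], [1.0], [2.0], [3.0]])
t = np.array([0, 0, 0, 1, 1, 1])
probs = LaplaceGPClassifier(lengthscale=1.0, variance=1.0).fit(X, t).predict_proba(
    [[-2.5], [0.0], [2.5]]
)
```

The latent mean is pushed through the same logistic link used during fitting, so the outputs are probabilities. In a multi-label setting, one such binary classifier would be fitted per label.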
Given the difficulty of applying a multi-label GP classifier, we applied 14 independent binary GP classifiers (one per label). The GP classifier is a non-parametric algorithm that makes full use of the available data. Interestingly, the performance gain obtained by adding the GP classifier is not large enough to justify discarding the simpler deep learning network, especially given the additional memory and processing time that the GP classifier requires.

Fig. 4 presents the best architectures obtained for Models 2 and 3 (Model 1 being a subset of Model 2). The description of the architectures in Fig. 4 follows the notation of [15], where more details about the layers and their connections can be found. In summary, a time-series of samples formatted as a 2-dimensional image is presented to the network, where a sequence of two CNN layers (the first two layers in Fig. 4) extracts new features that are stacked along an additional (third) dimension. The resulting tensor is flattened back to two dimensions before entering two LSTM layers. The LSTM layers process the temporal information, producing a final embedding of the data as a one-dimensional vector that is delivered to a final fully connected network, which generates the predictions. The first LSTM layer produces as many outputs as iteration steps, and these outputs form an extra temporal dimension (a matrix in Fig. 4), whereas the second LSTM layer produces a single output (a vector). The final layers are two fully connected layers. All architectures were trained for 200 epochs, where an epoch is a single pass over all the samples in the training dataset. We used Rectified Linear Units (ReLU) as activation functions, except in the last layer, which uses a sigmoid activation.
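The data flow summarized above (two CNN layers, flattening, two LSTM layers, two fully connected layers) can be sketched as a NumPy forward pass that tracks the tensor shapes. This is a sketch under stated assumptions, not the architecture of Fig. 4: all layer sizes, the 3x3 kernels with zero "same" padding, the random untrained weights, and the helper names (`conv2d_same`, `lstm`, `forward`) are hypothetical; only the layer sequence, the ReLU/sigmoid activations, and the 14 outputs (one per label, matching the 14 labels mentioned above) come from the text.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def conv2d_same(x, w, b):
    """2-D convolution (cross-correlation), stride 1, zero 'same' padding, then ReLU.

    x: (T, F, C_in) pseudo-image; w: (kh, kw, C_in, C_out) with odd kh, kw.
    """
    kh, kw, _, c_out = w.shape
    T, F, _ = x.shape
    xp = np.pad(x, ((kh // 2, kh // 2), (kw // 2, kw // 2), (0, 0)))
    out = np.zeros((T, F, c_out))
    for i in range(kh):
        for j in range(kw):
            # Contribution of kernel tap (i, j) at every output position at once
            out += xp[i:i + T, j:j + F, :] @ w[i, j]
    return relu(out + b)


def lstm(x_seq, Wx, Wh, b, return_sequences):
    """Single LSTM layer. x_seq: (T, D); Wx: (D, 4H); Wh: (H, 4H); b: (4H,)."""
    H = Wh.shape[0]
    h, c = np.zeros(H), np.zeros(H)
    outputs = []
    for x_t in x_seq:  # iterate over the time steps
        z = x_t @ Wx + h @ Wh + b
        i, f = sigmoid(z[:H]), sigmoid(z[H:2 * H])  # input and forget gates
        g, o = np.tanh(z[2 * H:3 * H]), sigmoid(z[3 * H:])  # candidate, output gate
        c = f * c + i * g  # update the internal (cell) state
        h = o * np.tanh(c)
        outputs.append(h)
    # Either one output per step (a matrix) or only the last one (a vector)
    return np.stack(outputs) if return_sequences else h


# Hypothetical sizes: 20 time steps x 10 features per step, 14 labels
T, F, C1, C2, H1, H2, D, N_LABELS = 20, 10, 8, 16, 32, 16, 32, 14
rng = np.random.default_rng(0)


def init(*shape):
    return rng.normal(0.0, 0.1, size=shape)


params = {
    "conv1": (init(3, 3, 1, C1), np.zeros(C1)),
    "conv2": (init(3, 3, C1, C2), np.zeros(C2)),
    "lstm1": (init(F * C2, 4 * H1), init(H1, 4 * H1), np.zeros(4 * H1)),
    "lstm2": (init(H1, 4 * H2), init(H2, 4 * H2), np.zeros(4 * H2)),
    "fc1": (init(H2, D), np.zeros(D)),
    "fc2": (init(D, N_LABELS), np.zeros(N_LABELS)),
}


def forward(x, params):
    """x: (T, F) pseudo-image -> (N_LABELS,) per-label probabilities."""
    h = x[:, :, None]                                       # (T, F, 1)
    h = conv2d_same(h, *params["conv1"])                    # (T, F, C1)
    h = conv2d_same(h, *params["conv2"])                    # (T, F, C2): third dimension
    h = h.reshape(h.shape[0], -1)                           # flatten to (T, F * C2)
    h = lstm(h, *params["lstm1"], return_sequences=True)    # (T, H1): one output per step
    h = lstm(h, *params["lstm2"], return_sequences=False)   # (H2,): final embedding
    h = relu(h @ params["fc1"][0] + params["fc1"][1])       # (D,)
    return sigmoid(h @ params["fc2"][0] + params["fc2"][1])  # (N_LABELS,)


y = forward(rng.normal(size=(T, F)), params)
```

The sigmoid on the last layer yields an independent probability per label, which is what a multi-label setting needs, as opposed to a softmax that would force the labels to compete.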
The loss function employed was Binary Cross-Entropy, and optimization was performed with mini-batch Stochastic Gradient Descent (SGD) using the Adam optimizer.

Doctoral Thesis: Novel applications of Machine Learning to NTAP - 154
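The training objective and optimizer named above (Binary Cross-Entropy minimized with mini-batch Adam) can be illustrated with a small NumPy sketch. To keep it short, a single sigmoid output layer stands in for the full network; the data are synthetic, and the sizes, learning rate, batch size, and number of epochs are hypothetical (the text uses 200 epochs). The Adam update follows the standard rule of Kingma and Ba with its usual default constants.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def bce(p, y, eps=1e-12):
    """Binary cross-entropy averaged over samples and labels."""
    p = np.clip(p, eps, 1.0 - eps)
    return -np.mean(y * np.log(p) + (1.0 - y) * np.log(1.0 - p))


class Adam:
    """Adam update rule applied in place to a list of parameter arrays."""

    def __init__(self, params, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
        self.params, self.lr, self.b1, self.b2, self.eps = params, lr, beta1, beta2, eps
        self.m = [np.zeros_like(p) for p in params]  # first-moment estimates
        self.v = [np.zeros_like(p) for p in params]  # second-moment estimates
        self.t = 0

    def step(self, grads):
        self.t += 1
        for p, g, m, v in zip(self.params, grads, self.m, self.v):
            m[:] = self.b1 * m + (1 - self.b1) * g
            v[:] = self.b2 * v + (1 - self.b2) * g**2
            m_hat = m / (1 - self.b1**self.t)  # bias correction
            v_hat = v / (1 - self.b2**self.t)
            p -= self.lr * m_hat / (np.sqrt(v_hat) + self.eps)


# Hypothetical synthetic multi-label data: 14 labels, 8 input features
rng = np.random.default_rng(0)
N, D, K = 512, 8, 14
X = rng.normal(size=(N, D))
Y = (X @ rng.normal(size=(D, K)) > 0).astype(float)

W, b = np.zeros((D, K)), np.zeros(K)
opt = Adam([W, b], lr=1e-2)
batch_size, epochs = 64, 20

initial_loss = bce(sigmoid(X @ W + b), Y)
for epoch in range(epochs):
    order = rng.permutation(N)  # one epoch = one pass over all samples
    for start in range(0, N, batch_size):
        idx = order[start:start + batch_size]
        xb, yb = X[idx], Y[idx]
        p = sigmoid(xb @ W + b)
        dz = (p - yb) / p.size  # gradient of the mean BCE w.r.t. the logits
        opt.step([xb.T @ dz, dz.sum(axis=0)])
final_loss = bce(sigmoid(X @ W + b), Y)
```

Combining a sigmoid output with binary cross-entropy gives the simple logit gradient `p - y`, and treating each of the 14 outputs as its own binary problem matches the multi-label setting.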
